Connor Leahy on Dignity and Conjecture

Connor was the first guest of this podcast. In the last episode, we talked a lot about EleutherAI, a grassroot collective of researchers he co-founded, who open-sourced GPT-3 size models such as GPT-NeoX and GPT-J.

Since then, Connor co-founded Conjecture, a company aiming to make AGI safe through scalable AI Alignment research. One of the goals of Conjecture is to reach a fundamental understanding of the internal mechanisms of current deep learning models using interpretability techniques.

In this episode, we go through the famous AI Alignment compass memes, discuss Connor’s inside views about AI progress, how he approaches AGI forecasting, his takes on Eliezer Yudkowsky’s “Die With Dignity” heuristic to work on AI Alignment, common misconceptions about EleutherAI, and why you should consider funding his new company Conjecture.

(Note: Our conversation is 3h long, so feel free to click on any sub-topic of your liking in the Outline below, and you can then come back to the outline by clicking on the arrow ⬆)

Contents

- Intro

- AGI meme review

- Current AI Progress

- Defining Artificial General Intelligence

- AI Timelines

- Understanding Eliezer Yudkowsky

- EleutherAI

- Misconceptions About the History of Eleuther

- Why GPT3 Size Models Were Necessary

- Why Only State of The Art Models Matter

- EleutherAI Spread AI Alignment Memes

- EleutherAI Street Cred and the ML Community

- The EleutherAI Open Source Policy

- Current Projects and Future of EleutherAI

- Why Getting Things Done Was Hard at EleutherAI

- Conjecture

- How Conjecture Started

- EA Funding vs VC funding

- Early Days of Conjecture

- Short Timelines and Likely Doom

- Cooperation in the AGI Space

- Scaling Alignment Research to get Alignment Breakthroughs

- To Roll High Roll Many Dices

- The Epistemologist at Conjecture

- What Economics Can Teach Us About Alignment

- What the First Solutions to Alignment Might Look Like

- Interpretability Work at Conjecture

- Conceptual Work at Conjecture and The Incubator

- Why Non Disclosure By Default

- Keeping Research Secret with Zero Social Capital Cost

- Remote Work Policy and Culture

- A Focus on Large Language Models

- Why For Profit

- Products using GPT

- Building SaaS Products

- Generating Revenue In The Capitalist World

- The Wild Hiring at Conjecture

- Why SBF Should Invest in Conjecture

- Twitter Questions

- Conclusion

AGI Meme Review

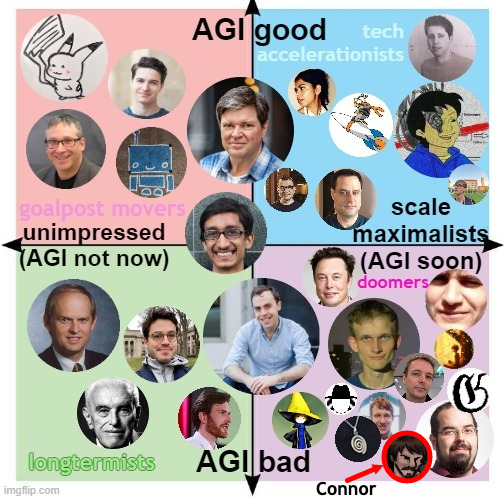

The 2x2 AGI Compass

Michaël: How did you react when you saw this meme? Did you find it somehow represent your views?

Connor: My first reaction to this meme was, hey, I’m in good company. So yeah, I think it’s pretty close to where I would place myself. Not exactly. I don’t think anyone could be more AGI bad than Eliezer, I think that is probably possible. Scale maximalism and AGI soon, I think are not the same thing. I think I may be more scale maximalist than Yudkowsky but I’m not sure where we both rank on AGI soon-ish because I don’t really know his timelines of that well. So my original reaction to this was I should probably be where Gwern is on this.

Michaël: Right, because you’re both bullish on scale.

Connor: Yes, I’m pretty bullish on scale on many things. Happy to talk about exactly what I mean by that. And I am not as pessimistic as Eliezer but I’m pretty close ⬆

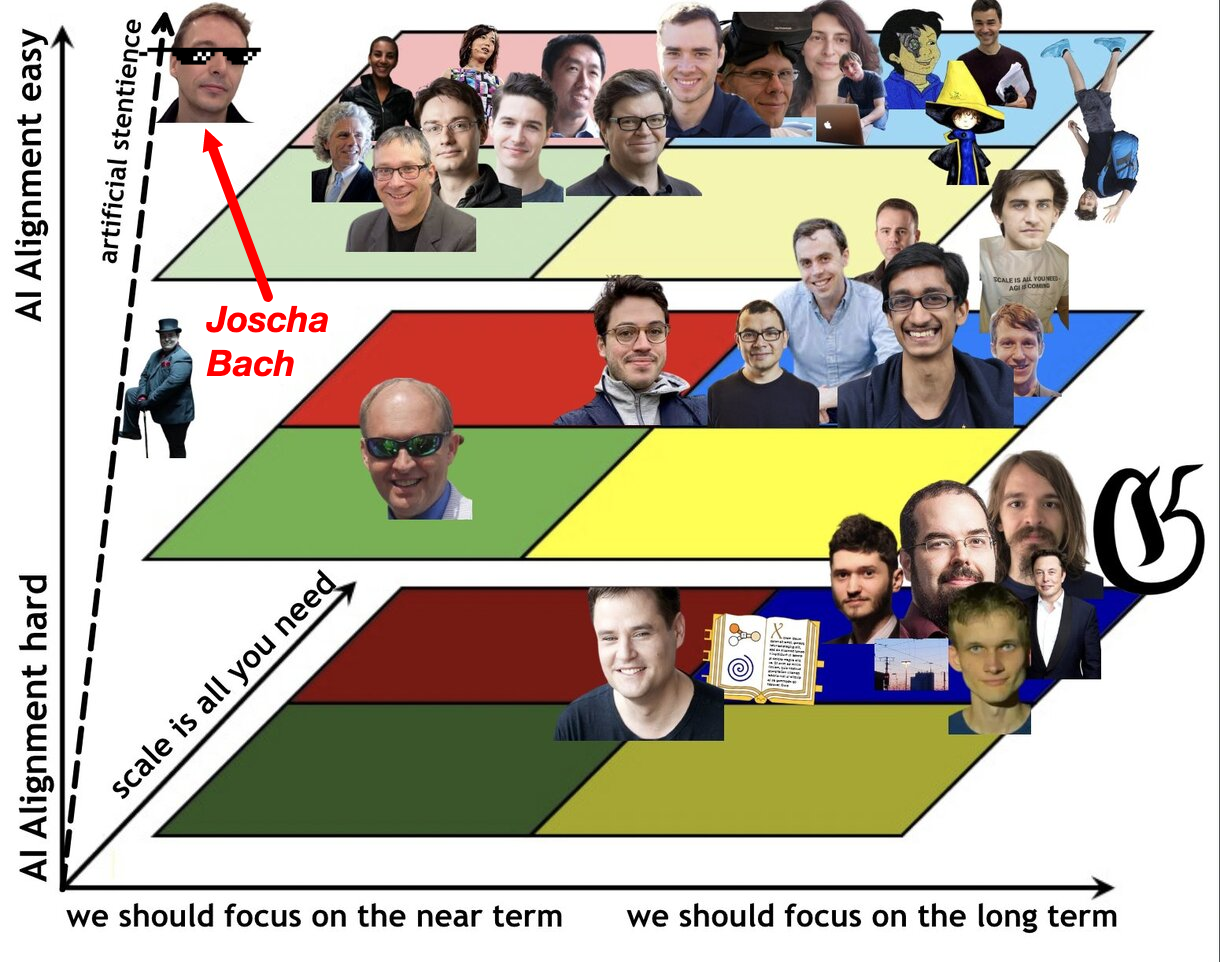

The 6x6 AGI Compass

Michaël: So I made another one after the reactions from this one where I had this quote where you said that you’re maybe more scale-pilled than Eliezer and less doomerish than him.

Michaël: So now you ended up being in this bottom right quadrant. Did you have another reaction from this one or is it basically the same thing?

Connor: My first reaction was fuck yeah, I got the cool corner, but the second reaction was Eliezer deserves the corner because of course in every political compass, your eyes first go to the most extreme corners. Of course those are where usually insane people are, but you’re looking in a political compass, you know you want to see the crazy takes. And I feel like as much as I appreciate my positioning here, my takes are marginally milder than at Eliezer’s.

Michaël: In terms of pessimism, yes. But in terms of scale pilled-

Connor: Yes.

Michaël: He doesn’t talk about scale so much.

Connor: So yeah, we could talk about scaling a bit. So it’s not that I literally think that a arbitrarily large or a large MLP is going to suddenly magically be AGI, that’s not exactly what I believe, but I think that almost all the problems that people on Twitter pontificate about is unsolved by deep learning will be solved by scale. They say, well, it can’t do this kind of reasoning or this kind of reason, it can’t do this symbolic manipulation or, oh, it fails at parentheses or whatever. I’m like, just stack more layers lol. I think there are some things that may not be solved just by scale, at least not by normal amount scaling. Okay, if you scale the thing to the size of a Jupiter brain or something, maybe a feat forward, MLP is enough. But there’s a few other things I think that are necessary but all of those are like pretty easy.

Connor: So like a few years ago, when I was like, when I take a piece of paper and I would sketch out, how would I build an AGI? There would always be several boxes labeled as magic where it’s like, I don’t know how this would actually happen. Those have not all been filled. There’s no more boxes where I’m like, I have no idea how to even approach this in principle. There are several of these boxes where I’m like, okay, this seems difficult or current methods don’t perform that well on this, but none of them are fundamentally magic and scaling is what filled in most of those boxes.

Michaël: Do you remember what were in those boxes?

Connor: If I knew how to build an AGI, I probably wouldn’t say in the public podcast, would I? ⬆

The 4d Compass

Michaël: Right. Huh, then there was this, I guess this is kind of the similar one, but in 3D.

Connor: That’s the big brain, so it’s in 4D.

Michaël: This is the big brain meme. This explains the other one because the other one is like a projection using something like PCA and there’s this… I guess the only one I’m not sure about is your slightly conscious axis.

Connor: I mean, that’s just a shitpost axis, right?

Michaël: Right.

Connor: I’m pretty sure Joscha was shitposting on that thread about what he believes. I don’t know Joscha that well but-

Michaël: I believe he is maybe more a computationalist in terms of consciousness than other people. You might believe that there’s a program might be conscious where-

Connor: I think Eliezer is very computational. I’m very computationalist. I’m more uncertain about that, but it seems to me that computationalism is the default assumption. I’m not saying there’s huge problems with computationalism, but I don’t see any other that doesn’t have equally if not larger problems.

Michaël: Do you believe that the large language model could be conscious?

Connor: Depending on what you mean the way we’re conscious. Conscious is what Marvin Minsky called the suitcase word. You going to have two people both use the word conscious and mean something that has absolutely nothing to do with each other. So some people, when they say conscious, they mean has attention or has memory or something like that. While other people have some ideas about self reflectivity or have ideas about emotions or whatever.

Connor: So consciousness is an unresolved pointer. You have to do a reference to pointer before you can actually talk about the question. There are certain definitions of the word consciousness that language models obviously have. They can reason semantically. If your definition of consciousness is can write a decent fiction story, then yes they’re conscious. But is that the best definition? Probably not. That’s not what we usually mean by that. So the theory of consciousness that I take most seriously are the simple, just like illusionist slash attention schema, like Grazianio or whatever his name, is type theories where we described consciousness is just like an emergent, just what it feels like to be a planning algorithm from the inside. That’s my default assumption. It’s just, there’s no mystery here, this is just what it feels like to be a planning algorithm.

Connor: There’s no mystery, there’s no hard problem. That’s just how it is. And whether these systems have experience is just not really a question. There’s no mystery here, there’s just the physics, there’s nothing more.

Michaël: I think for me, the main difference with how human experience consciousness would be that for us is more like a physical continuum where you can only literally interrupt our computation, except from maybe going to sleep would count as stop being continuous for a bit.

Connor: I sometimes lose consciousness when I’m listening to music or I’m driving a car or something like that. Sometimes things be like oh, I’m home, how did this happen? So I think talking about consciousness is a great example of an excellent way to waste all of your time and energy and make absolutely zero project progress in any problem that matters. If anyone brings in consciousness in conversation, I’m like, okay, so we’re not making progress to AI.

Michaël: Right. So we’re not making progress on AI, but at some point we’re going to get AGI or very smart models and we’re going to take moral decisions as to whether we can turn them off.

Connor: So we have to talk about is and ought, so the problem with the word consciousness is that on the one hand, it’s an is. Some people will generally say consciousness is a property that certain systems have or don’t have. But then also there’s an ought, is that consciousness is the systems, is the property that gives them moral weight. So I think there’s perfectly good arguments to be made that for example, animals or young children don’t have consciousness in the same way as adults do.

Connor: I’m not saying agree or disagree with this. I’m just saying there are perfectly coherent arguments to be made. And now you could argue, does that mean that they don’t have rights? Does it mean I can torture them? I don’t know. I’m saying probably not.

Michaël: They have less voting rights.

Connor: Yes they do and you could argue as consciousness as the relevant factor there or not. I don’t know, you can define it that way. I think it’s a much better way to kind of try to separate these from the ought. It’s like, okay, let’s separate the conjugation complexity, or the computational properties or whatever properties the consciousness has. For example, QRI, my favorite mad scientists think a lot about what they call valence structuralism and quality of formalism. So they think that there is an objective fact about consciousness and valence and emotions, qualia and stuff like this. Are they correct?

Connor: Fuck if I know, but at least it’s something you can talk about or you can at least try to reason about. And then you have to make the additional moral argument. So for example, say I identify some structure that I think is related to valence. Valence is how good something feels. And let’s say, I identify so for example, QRI as a theory, which is the symmetry theory of valence. So I think the symmetry of neural processes is what valence is. That’s just one of theories they have. So let’s say this is true. Let’s say can, well, it’s true. Let’s say I study this thing, I show in every brain study. I study all the brains. I look at everything and whenever there’s like symmetry, high valence is reported, whatever right? Cool.

Connor: Now I have a theory and now I have to additionally make the claim, this is something that’s morally valuable. We should for example, maximize valence for example. And then this valence cashes out physically as symmetry. This then has some really weird consequences. Since for example, black holes are very symmetrical. So maybe being a black hole is the most awesome thing in the world and we should just collapse the universe into a black hole switches. I’m not saying that this is a position anyone endorses, I’m just saying it’s really hard and we’re extraordinarily confused about this. I don’t think any philosopher, any cognitive scientist, anyone anywhere has any remotely close to a satisfying answer to these kinds of questions. It’s not clear to me if there are satisfying answers.

Connor: I think it’s something people should think more seriously about. It’s something that I think should be investigated in whatever means necessary. But I do not think this is something that is in the same kind of epistemological category as the kind of work we do in AI. So I’m not saying these are not important questions, I expect these questions to be become extraordinarily pressing in the near future, but they’re kind of like a different epistemic category. They require different kinds of epistemic inquiry than you might do when you’re admiral layers hole. So we could talk about the philosophy and valence and stuff like this for hours or whatever. Probably get absolutely nowhere, except I don’t know, high?

Michaël: But we are in California, so this is the time. So yeah, let’s stop this consciousness debate here. I just wanted to get your thoughts on the ins and out and now we talked about it. ⬆

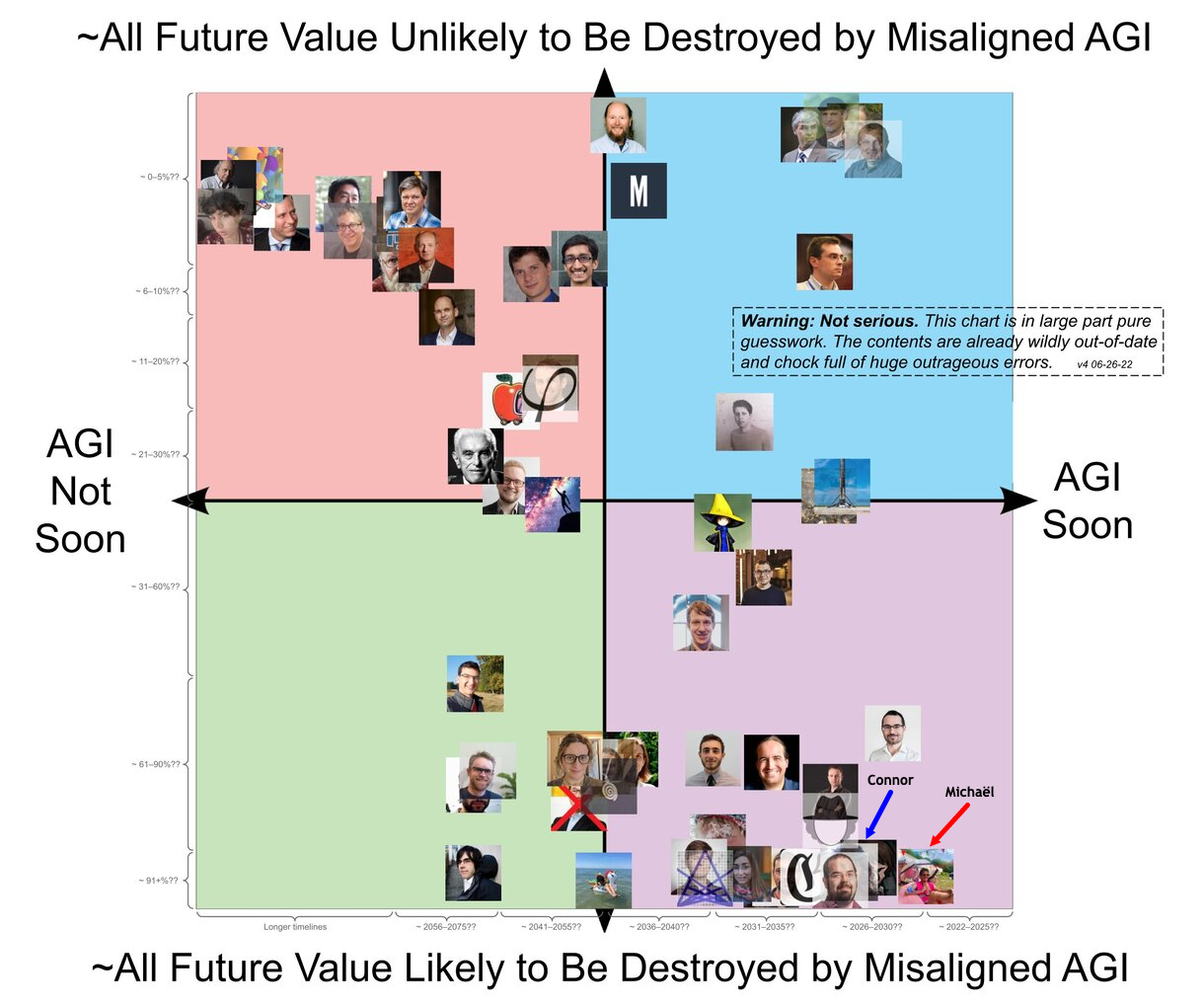

The Rob Bensinger Compass

Michaël: Now, the last meme for us will be like the one from Rob Bensinger, which is more close to people’s views. And there’s a bit more people here and the axes are bit different is AGI not soon and AGI soon. And then, all future values unlikely to be destroyed by misalign AI, which is maybe more what we care about. I don’t know if I asked you before your position, but I guess we are both below right corner. And there’s now a scale with years and you didn’t ask me for the years. I think that the years are for me, but yeah, what do you think of this? Do you have any thoughts?

Connor: Yeah, I think this is pretty accurate. I think this is pretty accurate to my kind of beliefs. I’m not sure what dates do you put in? Oh yeah, that sounds about right. Yeah, those those look about right for me, for my timelines.

Michaël: I think it’s 2026-2030 for you.

Connor: Yeah, yeah. That seems about right for my kind of thinking and yeah, I expect… So I think the main disagreement between me and Eliezer is not so much a disagreement or more than just, I am much more uncertain than him. I do not know how a human can get to the level of epistemic confidence he seems to have. So I don’t disagree with Eliezer really, some things. I don’t disagree with most of his arguments about why alignment is hard. It’s more just, I’m less certain than him. I have a greater variance that I could just be wrong about something.

Connor: So that’s why I’m by default and less pessimistic than him. But if I was as confident as him, I would probably be close to as pessimistic, not exactly but close.

Michaël: Right, so you’re saying that basically you could be at 99% probability of doom, but have like five or 10% error bars.

Connor: Yes, that’s, that’s kind of my thinking.

Current AI Progress

Michaël: Right and in terms of timelines, I remember you talking on Discord or in the last episode that… Yeah, you had pretty short timelines and even when we got some breakthroughs, in deep learning, you still had those short timelines. So you didn’t update much from those. So I’m really curious because in the past two weeks we had Minerva that could do math and multitask understanding. So it got 50% accuracy on math, which Jacobs Center predicted would happen by 2025. Did that update for timeline by anything? ⬆

Just Update All The Way Bro

Connor: Not really. I mean, maybe very mildly just because every small bit of information should update you some amount. But so basically… So yeah, we’ve had these big release in the last two weeks and over the last two months, the Metaco’s prediction for when AGI come has dropped by six months each twice within one month. So when you look at something like this, you can do what the rationalists, I believe refer to as a pro gamer move. And if you seize a probability distribution only ever updates in one direction, just do the whole update instead of waiting for the predictable evidence to come, just update all the way bro. Just do the whole thing. And this is basically what happened to me back in 2020.

Connor: So I updated hard on GPT-3, so I was already updating on GPT-2. My biggest update, I think on timelines was the release of GPT-2, a hundred million parameters. That was the really, really terrible model that could barely string together a coherent sentence. And I remember seeing that for the first time and I was just like, holy shit, this is amazing. This is the most amazing thing I’ve ever seen in my life. And a lot of other people didn’t update as strongly as I did on that. But I was already like, holy shit. We’re actually going to see AGI dudes. This is actually crazy. I didn’t update all the way to super short timelines. I was still like, I don’t know, 2045 at that time or something.

Connor: But then when GPT-3 came out and especially because it didn’t need new techniques, they just scaled the model larger. It makes such a huge difference, that’s when I started to really update. And so that’s when I was was started EleutherAI because I was like, oh, holy shit, I’m updating hard on this, I’m pivoting. We need to work on this. After GPT-2, I was kind of like, this is probably the end of the road. I’m going to work on something different for a while and now I’m kind of burnt out on this language model stuff. And GPT-3 came out like, nope, back, I’m coming back. I’m back boys. So I updated very hard. I pivoted back to this and I’m like, okay, look, it’s hard sometimes hindsight bias to see how things were. ⬆

EleutherAI Early Discoveries

Connor: But two years ago, things were quite different, especially in the alignment community. People weren’t really taking scaling and language models nearly as seriously as me and my friends were back in 2020. And so with the EleutherAI, we were one of the first groups that kind of took these super, super serious. We’re not the only ones, there were lots of groups like OpenAI and the later Anthropic people and stuff like… Th were already thinking in this regard, they were ahead of us. But we were some of the early people in the wider alignment community to update really hard on this and really shift our behavior in response to this. So basically over the first year or two of EleutherAI, we basically build so much tacit knowledge about these models.

Connor: We read every blog post, we played with models every day. We tried to build our own, we trained them, we see how they work. We thought seriously about them, we blog post. We thought about it, every limits and stuff that we basically had a speed run of updates that took other people two or three years to do. So the update that Minerva gave other people I already had in late 2020 basically. So and since then I haven’t had to update very much. It’s like Gato, not surprising, not at all surprising. I’m surprised we took that long. Like the the chain of thought prompts were not all surprising. That’s something I knew about like 6 to 12 months before anyone else in the world knew about it.

Connor: That’s one of the things I’m actually quite proud of that EleutherAI. We found out that those worked.

Michaël: So did you find the “less think step by step”?

Connor: Yes, like that kind of stuff. It wasn’t exactly that prompt, but this type of technique, we actually discovered before anyone else did, and we kept a secret because we were like, holy shit, this is really scary. And so that’s one of the things we then didn’t publish until after other people started publishing about it. And then we publish our draft about it, which we’ve had, we’ve been sitting on for like six months. So a lot of the things that people are discovering now we discovered at EleutherAI within like the first 12 months. So I have been running that update ever since. And so there hasn’t been much stuff that’s been updating me. ⬆

Hardware Scaling and H100

Connor: The only updates I’ve been having are hardware progress. It seems like the H-100s are quite a bit better than I expected them to be, which is bit spooky.

Michaël: Do you know what makes them much better?

Connor: Just the performance numbers, I’ve haven’t used them myself, but that I’ve heard in such, seem really quite impressive. And I have heard rumors about how much money corporations starting to spend on these things. And I’m like, oh shit. You think you’ve seen scaling, H-100 were just the warm up.

Michaël: So we’re going to see billions of dollars spent on H-100s?

Connor: I expect that, yeah.

Michaël: Okay so why what you’ve just said about scaling resonates with me about a meme I did recently about the Chad scale maximalists who didn’t perform any Bayesian updates since 2020, because he read this like neurons can’t lose paper, log-log plots, and then okay, I got it.

Connor: Yep, that’s sort of what happened. I mean, there was more tacit knowledge, there was more experimentation than that, but yeah basically. If you look hard enough at the log-log plots and you just kind of, and you look at hardware scaling and you have a bit of experience with how these models perform yourself, you do some experiments, you see your prompt improve performance and stuff. As Eliezer likes to say, sometimes the empty string should convince you, but here’s some additional bits. ⬆

DALLE2

Michaël: Thanks for add additional bits. Most people look at models through Twitter. They look at cool outputs and yeah, I guess the most impressive models so far have been Dalle-2, Imagen and Parti. So they can show their understanding by just throwing like cool pictures. Were you impressed by those at all?

Connor: Dalle-2 was a mile. I mean, it wasn’t really an update because I don’t think it’s that important for timelines. So EleutherAI was also very early on art models. So we were some of the first people to be dicking around with click guided diffusion and stuff like that. So I basically already had an update back then as well. We had a pretty early update where I looked at how small these models were and how good they already performed. And they’re like, just log-log plot, bro. But yeah, so I already had a pretty early update in the early models. I knew this was coming. Dalle was better than I expected in the coherence sense. I can’t stress this enough for people who haven’t tried Dalle.

Connor: It is actually amazing. Dalle is one of the clear… For me GPT-3 was the obvious. Like okay, how can you look at this and not be like, holy shit, takeoff is around the corner. But Dalle is so understandable. GPT-3, I understand if people can confused by it or GPT-3 is kind of confusing. It’s kind of hard to understand what it’s actually doing and how it fails and stuff. But Dolly, we have a bunch of Dolly art hanging around the office actually and it’s just incredible. It’s coherent, it can compose objects. It can do seem like yeah, sure, sometimes it’ll fail at some reasoning task. You say, draw three red blocks and it’ll draw four, okay, sure, it’s not perfect, but… ⬆

The Horror Movie Heuristic

A good heuristic I like to use to think about when we should be maybe taking something seriously or not is imagine you were the protagonist in a sci-fi horror movie, when would the audience be screaming at you? And I’m pretty sure with like GPT-2, GPT-3, Dalle and Imagen, this is a horror movie, right?

Connor: And you have the scientist in the lab and they click a button and then and draw this thing and draws and draw yourself and it’s like a robot, looking at them and they’re like, oh yeah, this isn’t interesting. You would be yelling at the screen at this point in time. Sure you can always post hoc or he’s like, oh, it’s just a neural network. Oh, it’s just combining previous concepts. Bitch please! Okay, what else do you want? What do you want? There’s a great meme I remember somewhere. Whereas the year is 2040, terminators are destroying the world, whatever. And Gary Mark is in the ruins of MIT, but they’re not using symbolic methods.

Michaël: They’re only using deep learning.

Connor: It’s not true intelligence, he says before he dissolves into nanobots.

Michaël: Yeah, so we are basically in the movi “Don’t Look Up” where there’s an asteroid coming and every time we react to something, “oh, it’s not very smart”, “Oh, you cannot put a red cube on top of a blue cube”, we’re basically like the journalists in the movie “Don’t Look Up” where there’s this asteroid coming and be like, “oh yeah, just an asteroid, we can just deflect it”. And the audience is just watching the movie and they’re like, “what the fuck guys, do something about the asteroid, do something”. We’re just screaming at the movie.

Connor: Yep, I haven’t seen that movie, but yes that’s kind of… Sometimes I feel this way. I think there are reasonable things to be skeptical about and such whatever, but also do the calculus people. To all the ML professors out there who don’t take these kind of risks very seriously. You’re smart people, you understand probability. Consider the possibility here that there are multiple options here and the options of the not good outcomes are not trivial. I like Stuart Russell, I think said this. It seems a very shocking amount of AI professionals never ask themselves the question, what if we succeed? What if just everything works exactly as planned? You know, you want to write AGI that can do anything human does and you just do exactly that. What then? So some people I think will answer something like, well, the AI will just love us or will just do what we want it to do.

Connor: And I’m like, oh God, that’s mm…. We can talk about that later, if you want to into some technical details about alignment and why expect I this problem to be hard. As you said, in the scale, on the graph. I’m pretty scale maximalist I expect AGI to not to be too hard and to come soon and I expect alignment to be hard.

Michaël: Yeah, let’s talk about AI alignment later, but I guess the thing about what if we succeed, some people definitions of succeeding is making this stuff do what we want them to do. Right. So for them, alignment is part of the problem.

Connor: That’s fair enough, that’s a fair kind of point. And I do think some people do think about it that way on. I don’t think many people think of it very explicitly. I think a lot of people kind of just focus on making number go brurr. Just improve benchmark performance, just make us smarter and such. And there’s a common meme. We’re kind of like, well, an intelligence system will just know what we want. And I think there’s very strong reasons to believe that is not trivial true. There are versions of this that might be true but the default version I do not expect to be true.

Michaël: Yeah. I think some people take alignment by default to be pretty likely and I think for both of us is pretty unlikely. Just to finish on the scaling part, did EleutherAI discover Chinchilla or something similar or was this a new law that you discovered?

Connor: We did not discover the Chinchilla law. I’m aware of multiple groups that did, so DeepMind weren’t the only ones who discovered this, multiple other groups did as well, but we were not one of them.

Defining Artificial General Intelligence

Michaël: Gotcha and on the memes that I mentioned, we talked a lot about AGI and I think to ground the discussion a little bit more, we should just maybe define it or just use a definition we both find useful to talk about it.

Connor: Yeah, so this is-

Michaël: Or we can ban this word as well.

Connor: No. Yeah, so, no, I think this is good. I think AGI is actually a good word.

Connor: So my favorite definition, I think is from a post from Steven Byrnes, which I think may not be posted yet. It may be posted by the time this episode goes out where he kind of like pushes back against Yann LeCun who argues that general AI doesn’t exist. So the way I think about the word AGI is there are definitions which are wrong in technicalities, but true in spirit. And there are those that are technically maybe you know, accurate but wrong in spirit. So I like the ones who are true in spirit, but every time I bring those up, some nitpick will be like, well actually, technically what you just said is incoherent. And I’m like, okay, sure. I’m aware of this. Finding a real technical definition is extremely hard and almost always missing something. So for the purpose of this discussion, I think is more productive if we use a definition, which is not 100% technically rigorous, but brings across more of the spiritual correctness

Michaël: The vibe.

Connor: The vibe. This is what the zoomers say I guess.

Michaël: We are in California.

Connor: We’re in California, true, true. I have to adapt to the culture more but yeah. ⬆

The Median Human Definition

Connor: So I think the obvious definition, a lot of people use, something and a system that can do anything a human can do as good or better. I think that’s a pretty reasonable… And not just one individual human, a system that can do any task, any human can do as good or better than a median human could, depending on which definition you want to use.

Michaël: Right, so it should be able to use a computer and go on the internet, but not code Ais.

Connor: Not necessarily, so I don’t like this definition for a few reasons. I’m sorry, I’m giving such an annoying answer, but I think this is actually kind of important to talk about. I’m going to give you basically four definitions or something, which are all right wrong but they’re all right in different directions. And if you kind of take the intersection or the union, you get something which kind of makes sense.

Connor: … take the intersection or the union, you get something which kind of makes sense. So this first one is pretty good, because it points out that we care about a thing that does things. So I don’t, for example, think it makes sense to define AGI by its architecture or by its internal calculations or how big it is or something like that.

Connor: I don’t think that’s really what we care about. So some people try to define AGI like it can’t be true intelligence if it doesn’t use method X. I don’t think that’s a good definition, because what we care about is does it do the thing or not? And then we can argue about what the thing is. So the reason this is not a good definition is because I don’t think it captures what I’m most concerned about.

Connor: So for example, I very much think there could be AGIs, that can hack any computer on the planet. That can develop nanotechnology. That can fly to Jupiter or whatever. But can’t catch a ball, because they just don’t put their computation into that.

Michaël: All right.

Connor: And then someone could say, “Aha, it’s not general. It’s actually narrow intelligence” And I’m like, “Okay, fine. Sure. But that’s really missing the point.”

Michaël: Thanks Gary Marcus. ⬆

The Not Chimpanzee Definition

Connor: Yes. So a different definition that also is not not correct. But I get to another nuances I like, which is one that I think Eliezer has pointed out in the past is, a thing that has the thing that humans do and chimps don’t. So chimps don’t go to the moon. They don’t go a 10th as far to the moon and then crash or something. They don’t go to the moon. They don’t build industrial society.

Connor: They don’t generalize to all these domains. They don’t develop biotechnology and computers and whatever. There is something that humans have that no other animal on the planet has. A lot of animals have various bits and pieces of it. It’s clear that chimpanzees use tools and they can have a very primitive sort of proto language, not really a language, but they have different grunts that different things and stuff and they can reason socially about each other.

Connor: I actually really recommend any AI research out there, read a book about chimpanzee behavior. It’s incredible how many people will make statements about how… And not just AI research, it’s also like philosophers of mind make statements about how animals clearly aren’t intelligent, because they can’t do X, Y, Z. And then you just read a book from an actual biologist that has worked with these animals. You’re like, clearly these animals do X, Y, and Z.

Connor: Chimpanzees obviously have theory of mind of each other. They can obviously reason about not just what are the other Chimp currently doing, but what does the other Chimp know about what I am doing? This has been shown in experiments. It’s very clear that chimps can do stuff like this. But chimps don’t go to Mars or the moon. Why not? So there’s something… Some general kind of capacity, which may be relatively simple.

Connor: So they might be a relatively simply arithmetic core, to the kind of general reasoning that humans do, which, again, some like… or Yann LeCun Schmidhuber might be bursting through my door right now like, “Well actually, not truly general because no free lunch or whatever.” Right? And like, Okay, sure, fine. Humans suck at theorem improving or whatever. Right? But we can build computers. So does it really matter? ⬆

The Tool Inventor Definition

Connor: So the definition I really like, which is this definition that Steve uses in this post, which may or may not be released, is he points out that some people say, well, like anything I would say this, and by the way, I am very sorry to anyone. I am misrepresenting you. Sorry Yan. Sorry Jürgen. I’m sorry if I’m misrepresenting any of you, guys not my intention.

Connor: But my understanding is they will say something, well, they’ll never be an agent that is, can do biotech and code AIs and catch a ball, and do blah, blah, blah. That’s computationally impossible. You’d be such going. I think that is true. In the limit, of course you can always find like, “oh, well it can solve NP hard problems can it?” And like, “yeah.” “Okay, sure, fine.” But what makes humans the best at solving protein folding is not because our brain is evolved to do protein folding calculations.

Connor: It’s because we invented AlphaFold. And I think that is at the core of what general, what AGI should mean. So AGI may or may not be good at protein folding, but it is capable of inventing AlphaFold. It’s incapable of inventing these kind of tools. Is it capable? It might not be good at doing orbital mechanics, but it can write a physics simulator and build a rocket. So I think that’s a good definition we should use-

Michaël: I think-

Connor: Which is not very strict. But… ⬆

Just Run Multiple Copies

Michaël: I think the main difference between humans and one AI agent, which is… Is that, genuinely, we are a bunch of humans on earth and we die. Some others come around and just invent new things. And we read books. So we’re not one agent. It’s more like billion agents doing stuff. And so when we create AlphaFold, it’s a team of people doing AlphaFold and all the other science we did before. If we program an AI, we train it on some data. Then we test it. It might invent protein folding, but not another thing. So it’ll need other iteration or at least be able to interact in the environment. Not just something that, it’s static and just like it’s trained and then tested. So it needs to… I guess that’s the main steel man I get from those guys. It’s just they think it’s impossible to do something very general that can learn any kind of problem solving.

Connor: Yeah. And I think basically there’s a failure of imagination here, is that they are pattern matching to how current AI systems look. They say AlphaFold, which is trained on one data set and it does one task.

Connor: But there is at least one existence proof of a system, which is trained on just a bunch of garbage just for the real world. And learns how to do all these things, which is humans. And you can say, “well, sure there’s multiple humans, whatever”. Yeah, that’s just run multiple copies, Lmao, or just build a bigger brain. The fact it takes 10 humans to develop AlphaFold or something’s just an implementation glitch in humans.

Connor: If we could just build a 10 times larger, single human or just have a giga von Neumann, he would just invent himself. He would need the team. He would need the other people. It might take him some amount of time. But if anything, the fact that humans have separate or separate entities and not just one giga-brain is just like an implementation flaw in the intelligence sense. It’s pretty clear to me that if you could just somehow pull all the compute, that would be better kind of strictly so-

Michaël: Right. And AI could just copy itself.

Connor: Yeah.

Michaël: And so the first definition is something like, what any median human could do? The second definition is what is different between a chimpanzee and a human basically like the different level of generality between a chimpanzee and a human?

Connor: Yeah.

Michaël: What are the third and fourth, if you still remember?

Connor: So the third was basically this Steve’s definition, which is a thing that can invent tools, which is kind of overlapping the second one.

Michaël: Okay.

Connor: And I do not remember what my fourth example was. I think it was that, it can do all economically relevant task or something, but I was actually going to bring that as an example of a bad definition, but I think we’re going to talk about that later. ⬆

AI Timelines

When Will We Get AGI

Michaël: Got it. Sure. We can talk about it later. So yeah. So just taking those definitions, just maybe the convex envelope of those, three or four definitions, when do you think we will get AGI? That’s a very controversial question. You can just give me very large intervals.

Connor: I mean, obviously the answer is, I don’t know.

Michaël: Right.

Connor: I have various intuitions or various inside views and various outside views on this is on. And these numbers might shift depending on what time of day you ask me or whatever. But generally kind of the mean answer I give people is, 20 to 30% in the next five years. 50% by 2030, 99% by 2100, 1% had already happened.

Michaël: It already happened, in some lab already?

Connor: Yeah. We just haven’t realized it yet.

Michaël: I can buy it.

Connor: I mean, that’s obviously a meme answer and I don’t read too much into that.

Michaël: No, no. But I could buy a world where we’ve already invented AGI and it just hidden…

Connor: Yeah, we’re just… We don’t realize it. We’re just staring at it and we’re like “Hmm, this thing seems weird.” But we don’t realize

Michaël: Oh, you mean we’re in a simulation?

Connor: No, no, no. It’s just… I mean, that’s also an option, but let’s not get into that bull shit. No, just like someone invented GPT-4 or something and they’re like, “mm-hmm. I mean, seems cute.” While in the background it’s doing something crazy and just like, “no-“

Michaël: Oh. Okay. Kind of a switch is turned- ⬆

Deception Might Happen Before Recursive Self Improvement

Connor: It’s like hiding yourself so we can. Yeah. Like a switch just turned is like already happened and it’s already in motion and we just are not all going to realize it until something crazy happens. Don’t read too much into that. I don’t… it’s mostly mean that. I say that just to raise the hypothesis to people’s attention. My error bars on these are all relatively large, but I take it. I do what is very important is I take it completely seriously, that we could have full on AGI in five years. I think that is an absolutely realistic possibility that we should all take completely seriously.

Michaël: Yeah. I agree that people don’t talk about it enough. And I think for me, I guess the main crux or the most important factor here is will this AGI thing be able to self-improve and become an even smarter version of itself very fast? So for me, I think the one concept that is more important is recursively self-improving AI could be a very smart version of copilot that creates copilot 2, or… Yeah. Do you have any definition for this, or even because I guess technically an AI could code another AI and we don’t have a very strong metric of what it means to improve itself. So is this something you’re think about or you consider when you think about those five years?

Connor: So I don’t think splitting on the axis of recursively self-improving versus not is a natural way to split things. I definitely agree if we have a system that’s like clearly self-improving, we’re probably fucked. But I think it is insufficient, but not necessary. Basically. I think also it’s not clearly defined, I think there’s lots of intermediate steps or for example, you have a system which may not be technically modifying its code, but maybe it kind of gradient hack, like it can change its training data.

Connor: It can train its internal gradients or maybe it can copy itself to more GPUs or something like that. So a pretty obvious example I could imagine happening something like… Okay, we have some system, it runs on… I don’t know ten A-100’s and it’s as smart as John von Neumann or whatever. Right? Or whatever, it doesn’t matter. And then… Or let’s give just an example. We have a logarithm that if run on A-100s is the smartest jump but running on 10, like some grad student. Right? And he looks saying like, “oh shit, this seems kind of stupid.

Connor: “ Well, I’m about to head in for the night. I’ll just run it on the big cluster and I’ll check in tomorrow. And then he runs in a thousand, A-100s and then it suddenly becomes giga von Neumann. And then before you even realize who knows what kind of things these things will learn or how it would act or whatever, it’s, I think a very important part of my model of thinking about AGI, is that there’s nothing inherently special about the point in intelligence axes of human.

Connor: It’s like we have z axis. We are of intelligence. And at some point we have median humans at some point we have John Newman or whatever. And there’s no reason that as we increasingly go from slug to bug to rat or whatever that as this continues, we naturally halt at the human thing. I think the difference between median human and John Von Neuman in the scheme of things is smaller than the difference between a reptile and a rat on whatever the scale means. So it’s very likely that if we have something that’s smart as a Chimp or something and, or as smart as a rat and we’re like, “looks lame as hell.” And then we run on a big computer.

Connor: It won’t stop at John von Neumann. It will just shoot right past that into some crazy regime that we don’t know what that would even look like or do. This is guaranteed to happen, of course not, look like no predictions are hard to make, especially about the future. Maybe this won’t happen. I don’t know, man, but this seems like the default, the null hypothesis. We don’t have any reason to not think this would happen. So we should just entertain the hypothesis that this is the default thing that will happen, because we just don’t have a good theory of how intelligence scales.

Connor: We have those scaling laws buts don’t really tell you that much. They tell you loss. But if I give you a loss number my model is 2.01, how good is it at math? The information isn’t contained in that number? Or, will it do a treacherous turn? It has lost 1.4 and you’re like, “this doesn’t mean anything. And it’s the kind of the things we’ve observed where even so the scaling of the losses are very smooth. The scaling on benchmarks can often be pretty discontinuous. It’s just like it has 0, 0, 0, 0, 0, 90%.

Michaël: Right. The performance on downstream task are yes. Discontinues. And I think, yeah, so you’re basically saying that, copying yourself on another server could be a convergent instrumental goal for an AI. And that might happen before you get… before you’re able to self improve?

Connor: It doesn’t even have to be the AI doing it. It could just be the researcher testing some new architecture or something. And then his test on is local machine. It doesn’t really perform that well. Or it seems totally harmless. And then they scale it up to the whole OpenAI cluster or whatever. And then suddenly just does something crazy. But what I’m saying is, this is not… ⬆

We Should Not Rule Out Scenarios

Connor: I’m not saying this is going to happen definitely or something. And what I’m saying is we can’t rule this out. My whole, this is like one of the main things, I like to stress. It’s not, I’m not saying, I know this is how the world is going to end. This is how AGI is going to happen. This is when it’s going to happen.

Connor: What I’m saying is, “Hey, here’s a bunch of scenarios that we can’t rule out.” I’m not saying they will happen, but we can’t rule them out. And I feel like a lot of critics of these kind of positions, seem to have the unnecessarily confident. They might say, “I don’t expect that to happen, in most not my high probability.” And that’s fine if you say “I have a 10% probability or 1% probability,” these things happen. That’s okay. I can argue about that. But 0% you have to be really God damn confident for that to be the case. You have to have some really strong, theoretical reasons to disbelief the same way I take seriously that it might be that all these things just aren’t a problem.

Connor: It’s just like either deep learning stop scaling or we hit some other roadblock or alignment just turns it out too easy. I think I assign some probability that those are true. That might be the case. I can’t rule them out. I can’t rule out. That it turns out a line is just easy or that scaling breaks. I can’t rule these scenarios out. I don’t expect them, but I don’t rule them out. And I kind of think that, the critics of AI safety and such should do the same is they should just like, kind of say like, “Hey, I can’t rule out that these scenarios would happen. Even if I think they’re unlikely.

Michaël: So if they were less confident about it, they would give you maybe 10% and they would start working on AI and then-

Connor: Well, I think if you give 10% chance that potentially the whole world could be destroyed in the next five to 10 years by this thing. This seems like a reasonable thing that… Okay. Even if you don’t want to work on that, I think we could maybe agree that it’s the whole field of AI alignment is 200 people maybe. And it feels like a few more people could be working on this problem. Just as an outside view, say you’re an alien observing a primitive species on another planet and like, “Hmm, okay. They’ve identified the problem. They have 200 people working on iy.” Again. Would the audience be screaming at the screen right now?

Michaël: Yes.

Connor: It feels like, I don’t think it’s that obs… That crazy of a proposition that maybe a few more researchers should take this seriously or at least not shit talk about it on Twitter. I think this is a pretty reasonable thing.

Michaël: Yeah.

Connor: Correct me if I’m wrong.

Michaël: Right. And I think when we talk about, taking it seriously there… We have even among those 200 researchers, pretty different view. So some people are more closer to the Effective Altruism movement might be more optimistic than you or even people working at, let’s say Antropic, OpenAI can be as bullish as us on scale, but still believe that we have 9% chance of getting it right. ⬆

Short Timelines And Optimism

Michaël: That really doing a good job with alignment research and even 200 people is good enough. So one report that came in 2020 was Ajeya Cotra reports trying to estimate what she calls transformative AI. So we can talk about the definition of transformatives AI, but basically is something that will happen before AGI. It is pretty significant for our economies.

Michaël: And I guess that puts some anchor into people’s timelines and they thought like, “oh, okay, that might happen in Ajeya Cotra report.” So she gives those numbers maybe like 2040, 2050. And she did a good job of trying to estimate the things. So I’ll give it five years less of, or 10 years less. But having a big jump for Ajeya report is basically being overconfident. So yeah, I guess a bunch of people in AI are anchoring themselves around those estimates. Maybe they updated with recent progress, but they don’t think that there’s 20 to 40% chance of getting AGI in five years. So I think there’s maybe less pessimistic and less bullish.

Connor: I think we should separate people’s timelines from how hard they think AGI is. The Ajeya report is fundamentally about when we should expect Transformative AI, which is a certain definition of a AGI, which I used to really like, but I now actually kind of dislike. We can talk about why. It’s not really about how hard alignment is or how high the probability of doom is. But I think you definitely put that correctly, that a lot of people in the EA sphere are way more optimistic about me, about how hard alignment is going to be. I think part of that is because of longer timelines.

Connor: If I thought I had 20 years of time to work on this or a hundred years to work on this, I would be way more optimistic. But so there is a correlation here, between these two factors, but I do think they’re separate. There’s also, you have very short timelines, but are still very optimistic and they tend to work at OpenAI.

Michaël: Right. So they’re Co-related, but I just like to make a distinction between two times, two kind of people were either very bullish on AI, short timelines but optimistic and people were longer timelines and maybe a bit more optimistic because they have more time and those people are not as concerned as you probably, and I guess not working maybe as hard or there’s not trying high risk startups. So yeah. ⬆

The Ajeya Cotra Report and Transformative AI

Michaël: Do you have any other thoughts on this report? Because you said you agreed with it at the beginning and-

Connor: Yes.

Michaël: Then maybe you have some disagreements?

Connor: Yeah. So I have some thoughts in that report. So first of all, I wanted to point out that I think it’s great that it was done. I am so happy that someone just actually sat down and just did the work. That is that report is like “Jesus Christ, that must have been a lot of work to put that thing together.” The amount of effort put into really exhaustively looking into every point, really writing down everyone, every assumption, every calculation, justifying things truly this is, this is the kind of stuff I love about EA and rationalism.

Connor: It’s just this kind of just taking an idea, whether or not it’s crazy and just really running with it. Just really going through the whole thing. So big props to Ajeya and everyone else who was involved in that report. I’m really glad it was done. I do disagree with the conclusion still. And so first I have a few problems with the definition of Transformative AI as the kind of thing we should be concerned about.

Michaël: Can we just define terms of AI-

Connor: Yes.

Michaël: If you remember the definition.

Connor: Yeah. So I think the definition for transformative AI was something like a system that can perform any economically valuable task a human in front of a computer could do, or a remote worker could do or something. And I think it helps out some definition about a certain percentage of the economical work. 50% of economical work could be performed by this AI or something like that. I don’t remember the exact definition. Unfortunately,

Michaël: The thing is more about its consequences on how much it could influence the GDP growth rate.

Connor: Yeah.

Michaël: The total GDP growth rate.

Connor: Oh Yeah. Yeah. That’s similar to Paul’s definition of slow takeoff, where he talks about… And also Hanson’s to some degree. So it’s good that you bring that up because… ⬆

Against GDP as a Measure of AI Progress

Connor: So one very strong opinion I have is GDP is absolutely one of the worst possible measures for AGI progress. And there’s very, very good reasons. We should never use it. John Wentworth, I believe wrote a very good post about this, or maybe it was Daniel Kokotajlo.

Connor: It was one of the other against GDP as a measure for AI progress. And basically, so there’s multiple problems. The obvious problem, the first problem, is of course that GDP is slow. It wouldn’t. It would only measure things that are taking go over years or decades, slowly integrate itself into economy and such, but even more importantly, GDP doesn’t really measure what most people think it measures.

Connor: If you actually look how GDP is calculated, it’s in a way kind of set up rather perversely, in that it kind of is designed to measure the things that don’t grow. The way it’s set up is that’s, you can look at current world GDP is definitely higher than it was several years or a decade or so ago. But for example, Wikipedia has zero impact on world GDP. I think Wikipedia is one of the most valuable artifacts ever created by mankind. Literally one of the most valuable things ever made.

Connor: And it has literally $0 impact on world GDP because it’s not a product and there’s no exchange of services or anything like that. This applies to software, to open source to knowledge, the internet. There’s this Phantom GDP that people think should exist. There’s all this value created by like technologies by internet, by open source and all this kind of stuff, which is just not in GDP.

Connor: So you can have people with… Or countries with GDP today, that are low middle income or low income countries that have access to the whole internet. But are by GDP measures know better often in 1970s. And so clearly if we were thinking about a powerful rapidly emerging digital technology that will change how things work that will scale and extremely efficiently, this is a pretty clear and it… And GDP can’t even capture the difference between living in India, 1970 versus living in some other it’s a middle income country today with access to the whole internet.

Connor: Clearly this is the wrong measure to be using. So that’s the one that’s… I think the main point of that post it’s explained better in the post than I explained here, but that I find that quite convincing. There’s also a separate point, which Eliezer brings up. I think at a few places, which I find somewhat compelling. ⬆

The Gradual Takeoff Scenario

Michaël: Was it the post about why it is wrong to reason about biological anchors?

Connor: Yes. I think he brings it up in that one. I don’t exactly remember, there’s a lot of Eliezer posts. And the point he makes is basically that because of regulatory slowness, because of the slowness of economy to uptake new technologies and stuff. What we will probably see is that the first AGI or powerful AI system that we actually see is the first one, who’s overcome a threshold, to be able to circumvent these systems. So this is one of the ways I think very fast takeoff could happen in that there actually is a… So this is one of the things where both Paul and Eliezer can be right, in this scenario, in this scenario, we have a gradual takeoff.

Connor: So we have systems that gradually become stronger across, but because of government regulation, because of corporate skittishness because of conservatism nothing’s ever released, because they’re like “oh no, it’s like, we have to first review this for five years or 10 years or whatever.”

Connor: And then, so the public doesn’t see any of this. So there’s thing ticking along in the background that the public doesn’t see. And then at one moment we have the breakout where some powerful system that escapes onto the internet or that can manipulate politicians or whatever to make itself able to spread or whatever. And then it will look this happened overnight, even. So it didn’t actually happen overnight. This is already the case. It’s… we surround ourselves with AI people.

Connor: We talk about AI and AGI all the time, but if you talk to Uber driver, he’s like, “what’s AI?” “I’ve never heard that word before.” It’s for most of the world outside of our tech bubble, they don’t know what the fuck a DALLE is. They don’t know what they don’t know, what a GPT is. They don’t know what any of these things mean. So I think from the perspective of many average people, even if there is a slow takeoff, it may very well, resemble a very fast takeoff. And I find this argument moderately convincing.

Connor: I think that’s a… Seems like a reasonable scenario that could occur. I’m not saying it will, but it’s like, again, seems like a pretty reasonable scenario. ⬆

Is Transformative AI Useful

Connor: So for these kind of reasons, I think the definition of Transformative AI is kind of… It has the benefit of being coherent. This is a thing I can talk and reason about, but it has the problem that I don’t think this is the actual thing we should be worried or care about. And that, I expect by the time Transformative AI is technically created, it may already be way too late.

Michaël: Yeah. I think there’s a couple of distinctions that you made that are interesting. One is, not everything is in GDP and Wikipedia. Maybe indirectly, because we used Wikipedia to train some models.

Connor: Yeah. Very indirect. Wikipedia obviously produces unimaginable amounts of value on the whole world. If you could measure how much it improves people’s education and ability to think. And so on, I would not be surprised if we could measure this, if it was trillions of dollars, I would not be surprised.

Michaël: Right. It is just like, if we have a world without Wikipedia and the world with Wikipedia, the world with Wikipedia will have a higher GDP? I believe.

Connor: Maybe, but I don’t know if that would capture everything. I think it’s possible. I expect that to probably be the case, but I’m not sure if that actually captures what we mean by the value of GDP above Wikipedia. I mean, yeah. I’m not saying I have a strong model here, but clearly something’s wrong about the way we measure.

Michaël: Yeah. And then I think this points out what we care about for our models. So if we have good data and good information on the internet, that’s like, we are the point where we can like train AGI train something very smart. And even if you’re not directly captured by something economic value for humans, it’s valuable for, to train our models potentially.

Michaël: And the other distinction you made is being private progress, stuff that are, happening in products or stuff that are just cool demos on the internet like Dalle, I talked to my Uber driver about Dalle to point at something where humans could be automated. I tried to talk to my designer friends like, “oh, have you seen this? You can just type a few words and now you can get a good design,” and they have no idea. Right. So there’s people on the streets then there’s people in tech and then there’s the people working on the model. So let’s say OpenAI employees who know stuff we don’t know about.

Michaël: And possibly we could get… They could know that we’re close to a fast takeoff. And we have no idea because we didn’t have access to the four models. So yeah, those posts were 2020, 2021. Those were answers to AGI Contra report. ⬆

Understanding Eliezer Yudkowsky

Michaël: But now in 2022, we had another debate between Paul Christiano and Eliezer Yudkowsky, as you might be aware of. One, the first post was by Elizer Yudkowsky, called… I think the first post was Die With Dignity. There was a troll post in April, but administrative, it counts to the debate. ⬆

The Late 2021 MIRI Dialogues

Connor: So arguably things started with the MIRI dialogues, which were-

Michaël: Right.

Connor: … a series of long transcribed discussions between Eliezer and various other people. Then there was the Die with Dignity post, which I would actually… to talk about maybe briefly, because I think I have a very different take than most people do on that post. And then after that we had the AGI Ruin post by Eliezer where he just kind of listed a bunch of reasons why you think we’re fucked. And then Paul made a very thoughtful response where he kind of goes through it and says why he thinks Eliezer’s strong about these kind of things. And these are… All, these schemes, they feel like a kind of tie together. I feel like Dying with Dignity kind of parallel, but I think there’s a thread between the dialogues to the AGI Ruin to Paul’s response.

Michaël: Right.

Connor: Which is kind of where we are now, Nate Soares has started to also add his voice in, but yeah, that’s kind of where we are right now.

Michaël: So which one did you want to, what do you want to go first? So we could just summarize the discussion for the long debate between-

Connor: I think that’s… That may be physically impossible.

Michaël: Right. This is too long. This is too long. So maybe, okay. So-

Connor: I think I… Okay. So-

Michaël: May be your take on the Die With Dignity, you said you had some… your take on this

Connor: Yeah. I could talk about Die with Dignity part. But let’s focus first on the arguments or the dialogues and the AGI ruin. And then I’m going to talk about Die with Dignity. Cause I have a few things to say about that.

Michaël: Go for it. ⬆

Paul Christiano and Eliezer

Connor: So, something very interesting, I think was going on. So in the dialogues between Eliezer and Paul. So there’s these huge long discussions between Eliezer and various other people among others like Paul, Rohin Shah, Richard Ngo, some people from OpenPhil and such. And one thing that I noticed reading the dialogue, I read the whole thing. It’s 60,000 words or something, but I read, I read all of it. And was… I found myself having an interesting reoccurring response, is that everything that people who are not Eliezer say seems to be more reasonable, but for some reason I think Eliezer is right.

Connor: And this was a bit of a dissonance inside of my head while reading the whole thing. I was like, “I’m on Eliezer’s side, but I really can’t explain why, because it seems all the other people are being way more reasonable than him.” I think I’ve mostly resolved this confusion since then. So I think the apotheosis. So, if you a dear listener read any parts of this, I would recommend you read the AGI Ruin post followed by Paul’s response to it.

Connor: Because the AGI Ruin post was objectively quite bad. I think Eliezer’s… I think it literally says that in the post, this is a bad post. And I think Paul’s response is very good. I think he makes a very, very good response to it. Also, Evan Hubinger has a very good comment, responding to the AGI Ruin post, which is also very, very good.

Connor: And really they make just a much better case than Eliezer about how he’s way too pessimistic. He’s dismissing all these other things he’s way too over confident in his views of how progress is made in these fields or such. And ultimately, I think, for example, Paul’s post is just a much better post than Eliezer’s on every objective measure.

Michaël: I think you could even just read Paul’s post because he gives the main point he agrees with Eliezer, right?

Connor: Yes. I would read both. And so that whole buildup was building up to a but. And here comes the but. But, there’s… Eliezer is a very interesting writer. I’ve always found reading Eliezer to be very pleasant. Some people like him, some people dislike him, whatever. Right? But something about Eliezer’s writing has always been very appealing to me. It’s been very easy. It doesn’t cost me energy to read Eliezer’s stuff mostly except the psychic damage from reading his fan fictions…

Connor: Except the psychic damage from reading his fan fictions. But I think one of the keys to understanding why I like Eliezer stuff and some people, others dislike it is that he has a certain way of writing, which is often more, almost metaphorical or more like fables, almost. He often talks in dialogues. He often tries to write things in non-technical stuff. While for example, Paul, is much better at like saying very concrete, well, no, depending on concrete, but very technical things. Trying to make predictions, trying to ground things and betting. One of the most frustrating parts of the whole dialogue is when Paul just tries to get Eliezer to bet on literally anything. He was like, “Eliezer, pick anything you want. I’ll make a prediction on it and we can just take.” And he just resists it and resists it. Like, “Oh, for God’s sake, Eliezer. What the hell are you doing, man?” So that’s where I think really, most people turned against Eliezer on that.

Connor: Well, Paul was having a silver platter. “Look, Eliezer, anything you want, just bet on it. And I’ll bet on it as well.” And I think they eventually found one tiny thing to bet on, but it was a real painful process. And that didn’t make Eliezer look good. Here’s Mr. Bayes himself. He was always, “Oh, you should always make bets.” And here he is not making any bets. And I can tell that’s, I think, a very fair criticism of Eliezer, that he didn’t do that, that he should have made bets.

Michaël: I think they both didn’t find anything concrete to bet on.

Connor: Yes.

Michaël: So it is very easy to say, “Okay, give me a bet and then I’ll make a prediction.”

Connor: Yes. And the truth is that is very hard to do. So I didn’t update as negatively as other people did. I was like, “This is actually a very hard thing to do. This is hard, to find bets like this.” But also, I see in this sense, Paul was being more traditionally rationally virtuous in a sense. ⬆

Eliezer Wanted to convey an Antimeme

Connor: Here’s my hot take. And I don’t know if Eliezer agrees with this or not. Eliezer, if by some chance you ever listen and you disagree with me, I’m sorry.

Connor: But my interpretation, I think a lot of people were missing what was actually happening in these dialogues or why Eliezer was saying the things he was doing. Again, this is just my interpretation. It could be completely wrong here. But I think what was happening is, was that Paul and Eliezer were actually having two completely separate conversations in parallel. And a lot of people pointed this out, it seemed like there’s talking past each other. And I think the reason this happened is because they had two different goals. I think what Paul was trying to do is, he was trying to talk like a scientist, to have a scientific disagreement, to try to find cruxes and whatever.

Connor: I think Eliezer had a different goal. I think what he was trying to do is to convey an antimeme. So an antimeme is an idea that by its very nature, resists being known. It’s something that if I tell it to you, you’ll forget it or you’ll want to forget it. Or it will not properly integrate into a world model and you’ll get a garbled version of this.

Connor: Antimemes are completely real. There’s nothing supernatural about it. Most antimemes are just things that are boring. So things that are extraordinarily boring are antimemes because they just, by their nature of what they are, resist you remembering them. And there’s also a lot of antimemes in various kinds of sociological and psychological literature. So a lot of psychology literature, especially early psychology literature, which is often very wrong to be clear, psychoanalysis is just wrong about almost everything. But the writing style, the kind of thing these people I think are trying to do is they have some insight, which is an antimeme. And if you just tell someone an antimeme, it’ll just bounce off them. That’s the nature of an antimeme. So to convey an antimeme to people, you have to be very circuitous, often through fables, through stories you have, through vibes. This is a common thing.

Connor: Moral intuitions are often antimemes. Things about various human nature or truth about yourself. Psychologists, don’t tell you, “Oh, you’re fucked up, bro. Do this.” That doesn’t work because it’s an antimeme for long. People have protection, they have ego. You have all these mechanisms that will resist you learning certain things. Humans are very good at resisting learning things that make themselves look bad. So things that hurt your own ego are generally antimemes. So I think a lot of what Eliezer does and a lot of his value as a thinker is that he is able, through however the hell his brain works, to notice and comprehend a lot of antimemes that are very hard for other people to understand.

Connor: I don’t think Eliezer is the greatest mathematician or the greatest scientist or the greatest whatever. And I think he would agree with that, that he’s not the greatest mathematician or anything like that. I think his value is in that for whatever reason, the way his brain works is de-correlated in certain ways from how most people’s brains work. And it allows him to pick up certain antimemes, which are valuable. And I think a lot of his frustration throughout the years, which he expresses very strongly in a lot of his writing, is because he’s been trying to explain antimemes to people, and it’s really fucking hard. If you read the Sequences, there’s a lot of obvious, “Okay, here’s how this thing works. Here’s how this thing works.” But so much of it is almost more like religious texts. Not in that it’s worshipful, but in that it’s metaphorical, it’s not supposed to be taking literal. You’re supposed to hear a story and then update on the vibe. You’re supposed to generalize from this scenario to other scenarios to learn antimemes.

Connor: And I think for example, with the Against Biological Anchors thing, what he was trying to do was to convey an antimeme, not to convey a prediction. Paul thought Eliezer is trying to weasel out of her prediction because he doesn’t have one. ⬆

Death With Dignity

Connor: Eliezer is trying to say, I have a valuable antimeme that people should understand and I’m trying to communicate it to you, but I’m failing because it’s hard to communicate. And this brings us to the Death with Dignity post. So the Death with Dignity post, you might say, “Connor, this sounds insane. What the fuck are you talking about? Antimemes? That seems ridiculous. I can’t think of a single example of an antimeme.” That’s the point.

Connor: But that aside, I have a perfect example. A real life living antimeme in full daylight for you to look at. And that antimeme is the Death with Dignity post. The Death with Dignity post is, in my opinion, one of the most perfect examples of an antimeme in broad daylight. So let’s talk about the Death with Dignity post. The Death with Dignity post is a very controversial post written by Eliezer where he kind of has his doomer mentality or whatever. He is like, “MIRI is shifting away from saving the world to, let’s die with as much of dignity as possible.” I mean, people say this is a troll post.

Michaël: It was posted on April 1st.

Connor: It was April 2nd, I think.

Michaël: Probably, he did it on April 2nd.

Connor: Yeah, something like that. I don’t know. So you’re like, “Oh, it’s April fool’s. Ha-ha, this is just a joke.” But no, really. It was a joke, but wasn’t really a joke. So my intuition, it’s not a joke, it’s an antimeme. So a great way to convey antimemes is through jokes. Comedy is one of the most effective ways to convey antimemes, especially social antimemes, things outside of the overtone window. One of the class antimemes is things outside of the overtone window. Humans will naturally resist just hearing and knowing things outside of the overtone window. It’s a natural way to create antimemes. And the what is so fascinating to me about that post, when I first read the post, my reaction, I think, was quite different from many other people in the EA and rationalist world.

Connor: My reaction was like, “Oh yeah, wasn’t that always the plan?” So I was just like, “I mean, yeah. Okay. Yeah, of course.” And I’ll explain why I had this reaction in a second.

Michaël: Maybe can you just summarize the main point?

Connor: I’m going to get to that. I’m going to get to that. So the surface level is that he says, “Look, everything’s so fucked. We can’t save the world, we should just try to die with the most dignity as possible, right.” And then he said like, “Instead of trying to focus on saving the world, we should focus on just dying with more dignity or whatever.” So that’s the surface level of that. That’s the non antimeme, that’s the packaging. The packaging is, “Okay, we should. Oh, we’re so fucked, I’m so depressed. Blah, blah, blah.” That’s the packaging. But that’s not the anti meme.

Connor: The antimeme is in a very, very hidden spot. The third paragraph. I’m joking, but I’m not. So why I love this post so much as an example of an antimeme is that he literally spells out the antimeme. Literally word for word, spells out what the antimeme is. And then the top rated comment completely misses it. It’s actually amazing. ⬆

Consequentialism Is Hard

Connor: So the antimeme in the post is that, so the surface aesthetic, the first two paragraphs or something are this, “Oh, we’re all fucked. Let’s all be depressed.” And then in the third or fourth paragraph or whatever, whatever the exact paragraph is, he explains what he actually means by this.

Connor: And what he means by this, is that gives his example. There’s a bunch of people in the rationalist movement and elsewhere who hear about the AI alignment problem. They hear like, “Oh well, things are so dangerous and we’re all going to die, blah, blah, blah.” And then they’re like, “Well, okay, we should blow up Nvidia. That’s a reasonable thing to do, right?” And like, “No, that is not a reasonable thing to do. You goddamn idiots, holy shit.” And the fascinating thing about this, so I have heard people seriously making this. So people are like, no one actually thinks this way. “Oh, there are people that think this way.” And I always have to tell them, so they’re like, “Well, Connor, if you are so pessimistic, why aren’t you blowing up TSMC?” And I’m like, “Oh my God, how can I explain to these people why this is stupid?”

Connor: The fact that you came to the conclusion that this was ever a good idea already shows that there’s something wrong with your thinking. So Eliezer diagnoses this thing the similar way to I do, is that utilitarianism and consequentialism is hard. Consequentialism is fundamentally hard. Consequentialism is the reasoning from consequences. Whatever makes the best consequences, that’s what you should do. This is fundamentally computationally hard. It’s different, for example, deontology. Deontology is just, you have certain rules and you just follow those rules. It’s kind of the difference between, P and NP in computational complexity theory. Evaluating your rules is always a constant number of steps, right. You just kind of, “Look at the world.” I have my like golden rule or whatever. I evaluate the rule and then I do that.

Connor: Consequentialism is fundamentally like NP. It’s much harder. I have to actually simulate all the outcomes of my possible actions and then pick the best ones. And this is arbitrarily hard. So if you are an ideal reasoner with infinite big brain energy, right, then of course consequentialism is the correct way to reason. Of course, it is, it’s obvious. But here’s the antimeme. So what happens is a lot of people, so humans are, by default, deontologists. Consequentialism is kind of unnatural to humans. Most people are deontological. And some people, some usually smart people, usually rationalist type people, hear about this great idea, utilitarianism, consequentialism. And they’re like, “Wow, this is obviously better. So I’m going to be a consequentialist now.” And on paper, that sounds great. But you can’t be a perfect consequentialist because it’s too computationally hard. So you’re always going to be an approximate consequentialist and that’s where everything breaks. ⬆

Second Order Consequences

Connor: So the diagnosis of why these people think blowing up TSMC is in any remotely way, a good idea that would not make everything literally worse, which it would, to be very clear is because they basically do a one step reasoning in a step. They’ll be like, “AGI bad. AGI need a GPU. Make GPU go away. Good. I did a consequentialism.” And I’m like, “If you’re not capable of doing one more step of thinking of the second order consequences of how this would destroy all cooperation possibility, this would destroy all goodwill. This would get militaries involved, this would get governments making things secret. This would make everything so much worse.” If you’re not capable doing this, maybe you shouldn’t be a consequentialist. And that’s the antimeme.

Connor: The antimeme is that what he’s trying to say is the deontological heuristic of try to do whatever maximizes dignity is much easier to compute. And his argument is if you already failed this first test, this shittier rule will make you more rational, will get you better outputs than you trying to use your broken consequentialism. Don’t be a consequentialist. Just take the heuristic, which is right 90% of the time, because otherwise you’re only going to be right 50% of the time. So it’s better for you to take the simpler heuristic of just taking this dying with dignity as a heuristic for generating your actions and you’re going to do better.

Connor: And then we have the top comment in that post from my good friend, AI_WAIFU, that’s his name, who I know quite well. He’s a great guy. He’s a great guy. He’s a very, very, very smart guy. I’ve known him for a while. And first, “Fuck that shit. Oh, I’m going to save the world. Oh, screw you. This is all terrible.” And I’m like, “Oh my God, this is an antimeme in action.” And I asked him afterwards, “Did you not read what the post said?” He’s like, “Oh no, I read the whole thing.” And I’m like, “Oh my God, this is an antimeme.” Everyone just did not see three-quarters of the post. It was just deleted from their memory. It was just amazing.