David Krueger On Academic Alignment

David Krueger is an assistant professor at the University of Cambridge and got his PhD from Mila. His research group focuses on aligning deep learning systems, but he is also interested in governance and global coordination. He is famous in Cambridge for not having an AI alignment research agenda per se, and instead he tries to enable his seven PhD students to drive their own research.

(Our conversation is ~3h long, you can click on any sub-topic of your liking in the outline below and then come back to the outline by clicking on the green arrow ⬆)

Contents

- Incentivized Behaviors and Takeoff Speeds

- Social Manipulation, Cyber Warfare and Total War

- Instrumental Goals, Deception And Power Seeking Behaviors Will Be Incentivized

- Long Term Planning Is Downstream From Automation From AI

- An AI Could Unleash Its Potential To Rapidly Learn By Disembugating Between Worlds By Going For a Walk

- Could Large Language Models Build A Good Enough Model Of The World For Common-Sense Reasoning

- Language Models Have Incoherent Causal Models Until They Know In Which World They Are

- Building Models That Understand Causality

- Finetuning A Model To Have It Learn The Right Causal Model Of The World

- Learning Causality From The Book Of Why

- Out Of Distribution Generalization Via Risk Extrapolation

- You Cannot Infer The Right Distribution From The Full Support And Infinite Data

- You Might Not Be Able To Train On All Of Youtube Because Of Hidden Confounders

- Towards Increasing Causality Understanding In The Community

- Causal Confusion In A POMDP

- Agents, Decision Theory

- Causality In Language Models

- Recursive Self Improvement, Bitter Lesson And Alignment

- Recursive Self Improvement By Cobbling Together Different Pieces

- Meta-learning In Foundational Models

- The Bitter Lesson Does Not Address Alignment

- We Need Strong Guarantees Before Running A System That Poses An Existential Threat

- How Mila Changed In The Past Ten Years

- How David Decided To Study AI In 2012

- How David Got Into Existential Safety

- Harm From Technology Development Might Get Worse With AI

- Coordination

- David’s Approach To Alignment Research

- The Difference Between Makers And Breakes, Or Why David Does Not Have An Agenda

- Deference In The Existential Safety Community

- How David Approaches Research In His Lab

- Testing Intuitions By Formalizing Them As Research Problems To Be Investigated

- Getting Evidence That Specific Paths Like Reward Modeling Are Going To Work

- On The Public Perception of AI Alignment And How To Change It

- Existential Risk Is Not Taken Seriously Because Of The Availability Heuristic

- How Mila Changed In The Past Ten Years

- Existential Safety Can’t Be Ensured Without High Level Of Coordination

- There Is Not A Lot Of Awareness Of Existential Safety In Academia

- Having People Care About Existential Safety Is Similar To Other Collective Action Problems

- The Problems Are Not From The Economic Systems, Those Are Abstract Concepts

- Coordination Is Neglected And There Are Many Low-Hanging Fruits

- Picking The Interest Of Machine Learning Researchers Through Grants And Formalized Problems To Work On

- People’s Motivations Are Complex And They Probably Just Want To Do Their Own Thing Most Of The Time

- You Should Assume That The Basic Level Of Outreach Is A Headline And A Picture Of A Terminator

- Outreach Is About Exposing People To The Problem In A Framing That Fits Them

- The Original Framing Of Superintelligence Would Simplify Too Much

- How The Perception Of David’s Arguments About Alignment Evolved Throughout The Years

- How Alignment And Existential Safety Are Slowly Entering The Mainstream

- Alignment Is Set To Grow Fast In The Near Future

- What A Solution To Existential Safety Might Look Like

- Latest Research From David Krueger’s Lab

- David’s Reply To The ‘Broken Neural Scaling Laws’ Criticism

- Finding The Optimal Number Of Breaks Of Your Scaling Laws

- Unifying Grokking And Double Descent

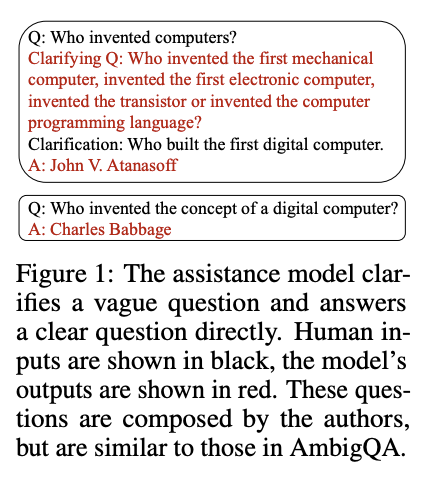

- Assistance With Large Language Models

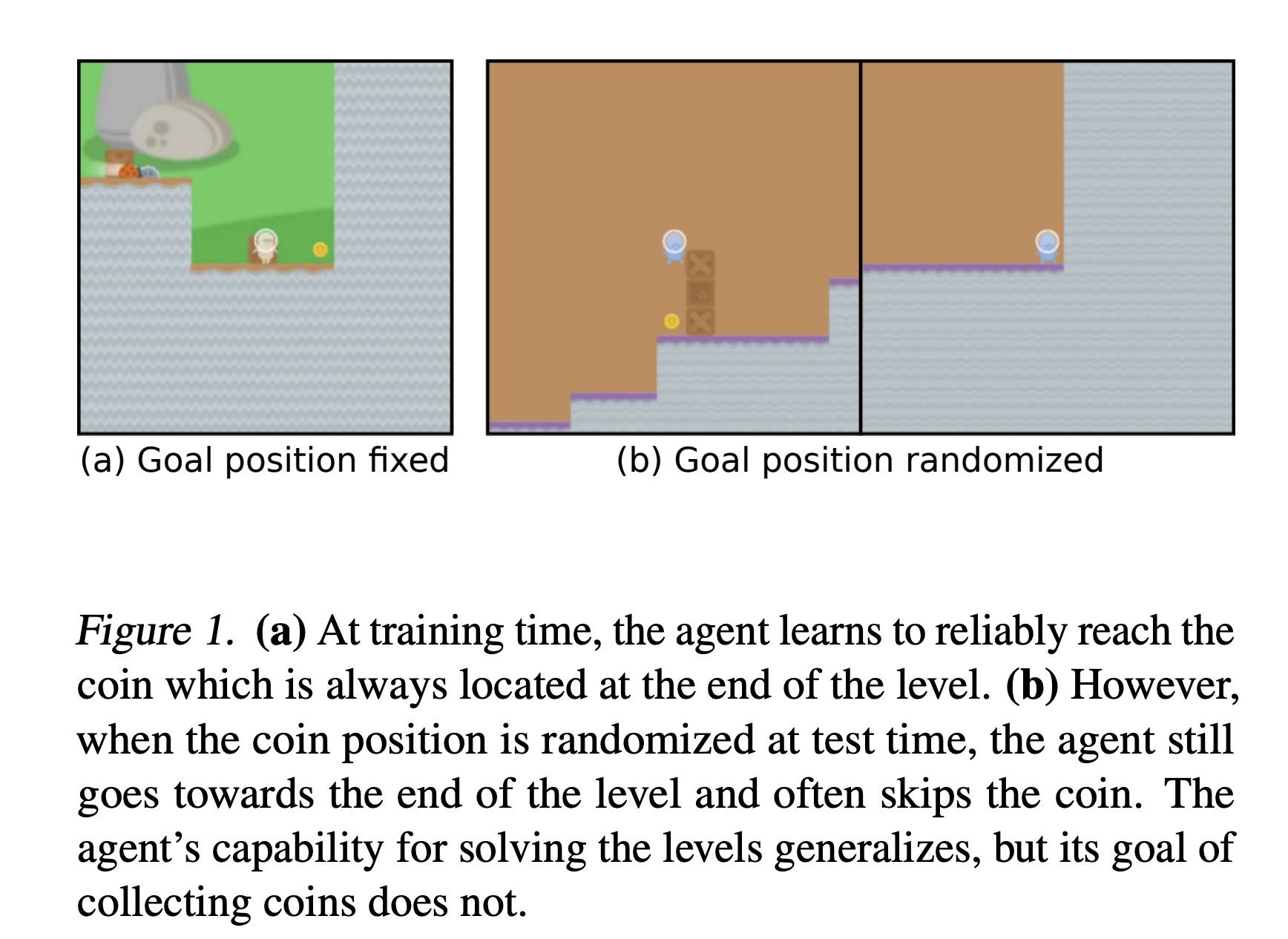

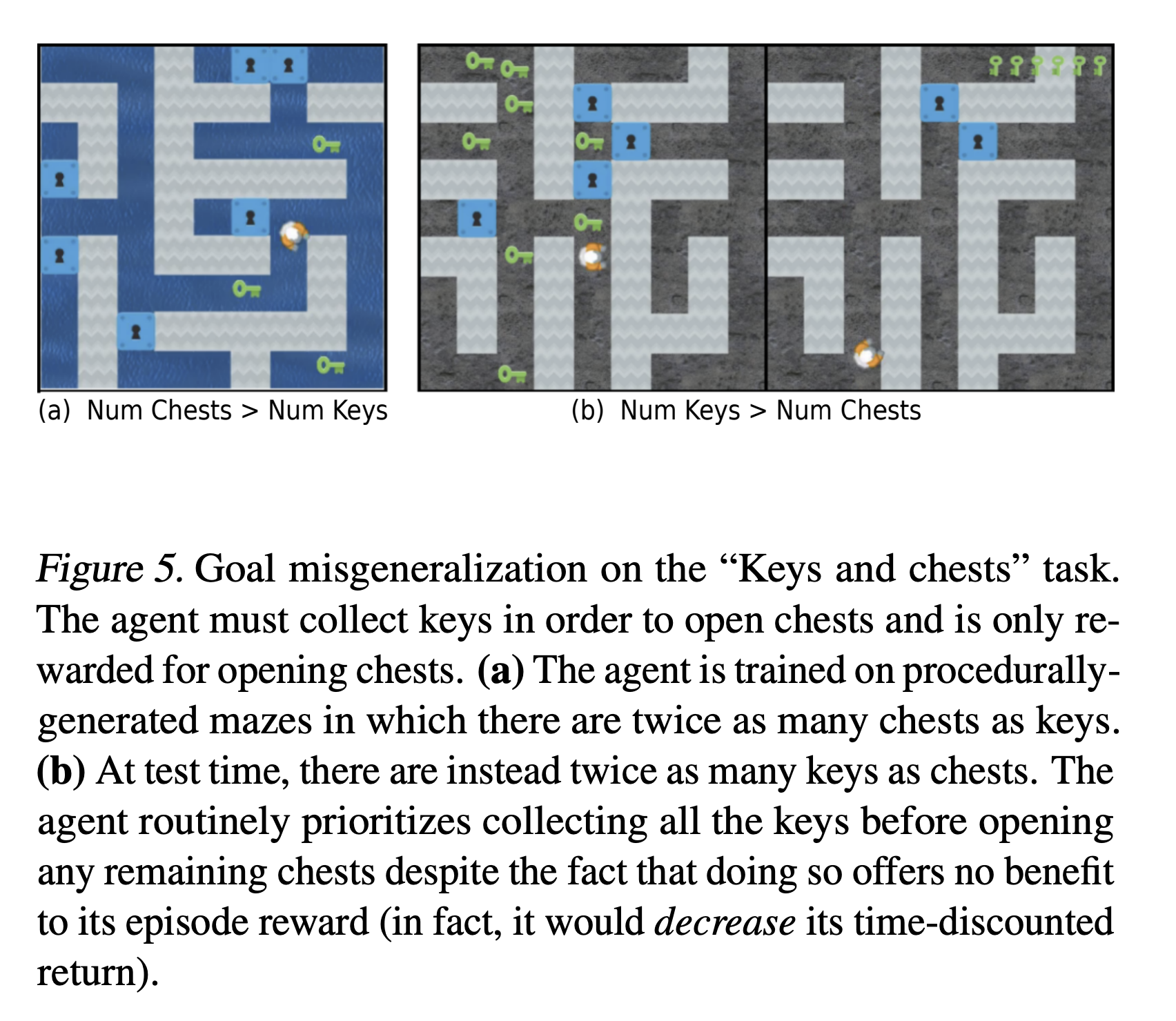

- Goal Misgeneralization in Deep Reinforcement Learning

- The Key Treasure Chest Experiment, An RL Generalization Failure

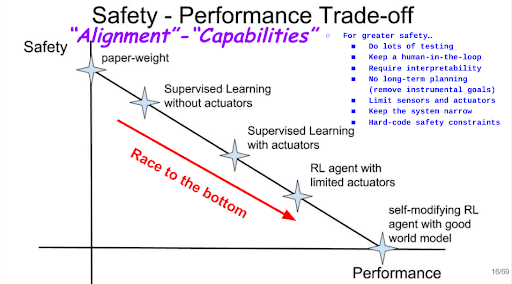

- Safety-Performance Trade-Offs

- Most Of The Risk Comes From Safety Performance Trade-Offs in Developement And Deployment

- More Testing, Humans In The Loop And Saving Throws From Hacks

- Would A Human-Level Model Escape With 10^9 years

- How To Limit The Planning Ability Of Agents: A Concrete Example Of A Safety-Performance Trade-Off

- Trainings Models That Do Not Reason About Humans: Another Safety-Performance Tradef-off

- Defining And Characterizing Reward Hacking

- Looking Forward

Incentivized Behaviors and Takeoff Speeds

Social Manipulation, Cyber Warfare and Total War

Michaël: What is the most realistic scenario you have for existential risk from AI?

David: I don’t have any realistic scenarios. It’s not about particular scenarios in my mind, it’s the high level arguments. But, I mean, you can think about it happening through social manipulation and just being able to trick people and getting people to take the actions in the world that you need to in order to seize control. Or you can think about it becomes really easy to actually solve robotics and build robots and we’re doing that.

David: And maybe that’s just because we’ve solved it, maybe that’s because once AI gets smart enough it can just do the AI research or the robotics research necessary to build robots that actually work. Some scenarios that I think people maybe don’t think about quite enough would be geopolitical conflicts that, in the worst case, maybe end up being military conflicts or total war. But even falling short of that, you can have security conflicts and geopolitical conflicts that are being waged in the domain of cyber warfare and information warfare. And I think in normal times, times of not total war, militaries tend to be somewhat conservative about wanting to make sure that the things that they deploy stay under their control. That has partially to do with the amount of scrutiny that they’re subject to. But if you think about cyber warfare and information warfare, I think a lot of that is just really hard to even trace and figure out who’s doing what. And there’s not such an obvious threat there of people dying or things happening in a really direct, obviously attributable media firestorm kind of way. ⬆

Instrumental Goals, Deception And Power Seeking Behaviors Will Be Incentivized

Michaël: But if they’re cyber attacks then you could take control of news or something like this, right?

David: Again, I don’t know what the details are here. What I’m postulating is situations where you have out of control competition between different actors. They really need to sacrifice a lot of safety in order to win those competitions to get a system that’s capable and strong and performant enough that it can accomplish its goals. And I think when you have that situation, at some point, the best way of doing that is going to build things that are agentic, that look like agents. And they don’t have to be perfect homo economicus rational agents. They might be less rational than humans or more rational than humans. But the point is, they’d be reasoning about how their actions affect the world over a somewhat long time span. And for that reason they would be prone to coming up with plans that involve instrumental goal, power-seeking behavior, potentially deception, these sorts of things that are, in my mind, part of the heart of the concern about x-risk from out of control AI is that the power seeking stuff… ⬆

Long Term Planning Is Downstream From Automation From AI

Michaël: I think one of the main concern people have with those scenario is that you would never want to deploy an agent that can reason over a month. All the agents we deploy right now, maybe think for few time steps for maximum, like a day but not max-optimal strategy for a month and manipulate humans for longer time horizons.

David: Yeah I mean. I don’t know. A day seems long to me. That’s the present we’re talking about. I’m saying, in the future, once you have AI that can do something better than people, and ok I have to caveat what I mean by better and we’ll get to that in a second, there then there are massive pressures to replace the people with the AI. At some point, we’ll have, I believe, AI that’s as smart as people and has the same broad range of capabilities as people or at least we’ll have the ability to build that thing. Things that are smarter and can do more things than people. That means that they’ll be able to do long-term thinking and planning better than people. And so, then it’ll be this question of why would we have a human run this company or make these plans when we can have an AI do it better? And the better part is, it might not actually be better. It’ll be better according to some operationalization of better, which is probably going to be somewhat driven by proxy metrics and somewhat driven by relatively short term thinking. On the order of next quarter rather than the coming millennia and all the future generations and all that stuff.

Michaël: Maximum profits for the quarter, maybe taking some outrageous risk that could end up with your token being devalued too much?

David: Yeah. ⬆

An AI Could Unleash Its Potential To Rapidly Learn By Disembugating Between Worlds By Going For a Walk

Michaël: Most people when they think about AGI, they would consider something like a CEO running a company or taking high level decisions. When we reach the level of an AI being able to replace a CEO or replace the decisions of CEO I think we kind of bridge the general level that people care about. I don’t really see a world where you can replace the decisions of someone without things already being very, very crazy.

David: I think the CEO thing… not necessarily, right? CEOs aren’t fully general necessarily. You might say that they are incapable of other things that people need to do. But this question of do things get really crazy, I think probably to some extent, but it’s hard to know. This brings us to another scenario I want to mention for how this could happen. Let’s say that things are going relatively well in the AI governance space. And so, we have some norms or rules or the practices that people are not just building the most agentic systems that they can and releasing them into the wild without any thought or consequences. The practices people are doing more what people have been doing historically and are doing with AI, which is trying to build relatively well scoped tools and deploying those. And these tools might be fairly specialized in terms of the data they’re trained on, the sensors and actuators that they have access to. And I forgot what you asked and how this connects but I’ll just keep going on this story. You could have something that actually is very intelligent in some way, has a lot of potential to rapidly learn from new data or maybe has a lot of concepts, a lot of different possible models for how the world could work. It’s not able to disambiguate because it hasn’t had access to the data that can disambiguate those. And in some sense, you can say it’s in a box. It’s maybe in the text interface box or maybe it’s in the household robot box and it’s never been outside the house and it doesn’t know what’s outside the house and these sorts of things.

Michaël: Like an Oracle with limited data or information about the world?

David: It doesn’t have to be an Oracle but just some AI system that is doing something narrow and really only understands that domain. And then it can get out of the box either because it decides it wants to go out and explore or because somebody makes a mistake or somebody deliberately releases it. You can suddenly go from it didn’t really know anything about anything outside of the domain that it’s working in, to all of a sudden starts to get a bunch more information about that and then it could become much more intelligent very quickly for that reason. People when they think about this foom or fast takeoff scenario, in most people’s minds, this is just synonymous with recursive self-improvement where you have this AI that somehow comes up with some insight or we just put all the pieces together in the right way that it suddenly clicks and it’s able to improve itself really rapidly at mostly at the software level. I think a lot of people find that really implausible because they’re like, “Yeah, I don’t think that there are actually algorithms that are that much better than what we’re using maybe.” Or they just think it’s really hard to find those algorithms and it’ll just be making research progress at roughly the same rate that people are, which is fast but it’s not overnight, like “we go from AGI to superintelligence fast”.

Michaël: Well, I guess our brains are limited in speed, but if we just had a program that can rewrite its own code and just optimize overnight, it would be ten to the nine faster than humans, right?

David: I don’t know. There’s a lot to say about the recursive self-improvement thing. I’m certainly not trying to dismiss it. I’m just saying it’s not the only way that you can get fast takeoff. You have this thing that was actually really smart already but it was kind of a savant and then it suddenly gains access to a lot more information or knowledge or more sensors and actuators.

Michaël: In your scenario of the household robot, let’s say we have a Tesla humanoid or something, this new robot. And it suddenly opens the door and runs into the street. But maybe everything the designers wanted is just optimized for the thing being good in the training data of the house. So I don’t really see how it’ll be good on the new data outside. Maybe it will just crash. But I can see it in on the Internet if you have something, maybe some Action Transformer that is trained on some normal text data to fill some forms and then it goes on Reddit, maybe the Reddit text data will be the same as the Airbnb form data or something. ⬆

Could Large Language Models Build A Good Enough Model Of The World For Common-Sense Reasoning

David: It’s certainly a kind of speculative thing. It’s something that I’m trying to address with my research right now, this question of, will we see this kind of behavior that I’m talking about where you have rapid learning or adaptation to radically new environments? One way that I’ve been thinking about it is this question of does GPT-3 or these other Large Language Models or any model that’s trained offline with data from limited modalities… is it building a model of the world? How close is it to building a model of the world, and a good one that actually includes things like the earth is this ball that goes around the sun and the solar system and the galaxy and here’s how physics works and all this stuff. Humans definitely have some model of the world that roughly includes things like this and it all fits together in obviously not entirely coherent but somewhat coherent way. And it’s really unclear if these models have that because we don’t really know how to probe the capabilities or understanding of the models in depth.

Michaël: Some people have tried for, I think, they tried asking common sense physics questions, maybe just multi-step, two or three steps and it worked pretty well.

David: I don’t know I’m not as up-to-date on all the research and foundation models as I’d like to be. But I haven’t seen anything super impressive in terms of common sense understanding of physical scenarios. I think certainly progress is being made but the last I saw, they still can struggle to manage a scenario where you describe several objects in a room and those objects being moved around by people that get confused by that stuff. But all of that is not necessarily revealing the true capabilities or understanding or world modeling ability of the model because we don’t know how to elicit that stuff. The results you get are always sensitive to how you prompt the model and things like that. You can never assume that the model is trying to do the task that you want it to do because what it’s trained to do is just predict text.

Michaël: You never know actually what the model wants, or sorry, what the model knows until you probe it the right way.

David: It’s a super hard open question. Can we even talk about the model having wants or having understanding and what does that mean? Because you can also say, “Well no, maybe it just has a bunch of incoherent and incompatible beliefs, just much more so than a human does.” Humans have this as well. But we can say humans, as I said earlier, have something coherent beliefs about what the world looks like, how it works, how it all fits together. ⬆

Language Models Have Incoherent Causal Models Until They Know In Which World They Are

David: Maybe Language Models just have nothing like that at all like that but maybe they do or maybe they have all these incoherent causal models. But they have a large suite of possible worlds that they’re maintaining a model of and something like a posterior distribution over or… it doesn’t necessarily have to be this vision thing. But then if they then get new data that disambiguate between these models, maybe they can rapidly say, “Oh yeah, I knew that there was one possible model of what the world was like.” That is basically the same model that you or I would have with all those nice parts. But I just didn’t know if that was the reality that I was in. And now all of a sudden it’s very clear that that’s the reality I’m in or at least the reality that I should be in. That’s the model that I should be using for this context and for the behavior right now.

Michaël: So the language model is living in some kind of quantum physics world and when it observes something it goes like “Oh yeah, I’m in this world now, this is what follows.”

David: Yeah.

Michaël: There was this Simulators post on LessWrong about how any language model can just simulate an agent inside him. I think in some way you can simulate any agent, some level of complexity but you’re not actually an agent, you’re just a simulator.

David: Then you can imagine that when you have this model that seems like it’s really dumb, doesn’t understand how to do this physics stuff and just can’t keep track of four different objects in a room. But then as soon as you actually plug it into a robot body and train it for a little bit on this kind of task, it’s just like, “Oh, I get it. I used to have this massive ambiguity over what world model I should use to make my predictions or my decisions but I’m very able to quickly update and understand the situation I’m in.” There’s no actual learning that needs to take place. It’s just more inference.

Michaël: If you could plug some language model or foundation model that has some very good world model and then you plug in some RL, maybe it’ll understand the world because you can use this agent simulation or just world modeling to interact with the world without having to learn a new model.

David: This is something that’s a really interesting research question and is really important for safety because people have very different intuitions about this. Some people have these stories where just through this carefully controlled text interaction, maybe we just ask this thing one yes or no question a day and that’s it. And that’s the only interaction it has with the world. But it’s going to look at the floating point errors on the hardware it’s running on. And it’s somehow going to become aware of that.

David: And from that it’s going to reverse engineer the entire outside world and figure out some plan to trick everybody and get out. And this is the thing that people talk about on LessWrong classically. We don’t know how smart the superintelligence is going to be, so let’s just assume it’s arbitrarily smart, basically. And obviously, a lot of people take issue with that. It’s not clear how representative that is of anybody’s actual beliefs but there are definitely people who have beliefs more towards that end where they think that AI systems are going to be able to understand a lot about the world, even from very limited information and maybe in very limited modality. My intuition is not that way. The important thing is to test the intuitions and actually try and figure out at what point can your AI system reverse engineer the world or at least reverse engineer a distribution of worlds or a set of worlds that includes the real world based on this really limited kind of data interaction. ⬆

Building Models That Understand Causality

Finetuning A Model To Have It Learn The Right Causal Model Of The World

Michaël: People doing research at your lab are trying to investigate those questions of how much you could generalize from one ability to another. Was that basically what you’re saying? You had some papers or some research on this?

David: It’s actually not anybody at my lab. No, that’s not true. There are two projects that I think are relevant to what we were just talking about. It was about this question of, “if you have a model that learns the wrong causal model of the world, the wrong mechanisms, can you finetune it to fix that problem?”

David: If you train something offline, you would often expect that it’s not going to learn the right causal model of the world because there are hidden confounders. Your data just doesn’t actually tell you how the world works and you just pick up on these correlations that aren’t actually causal. But then if you finetune it with some online data, let’s say you let it go out and interact with the world so it can actually perform interventions and see the effects of doing those actions or making those predictions, then that might fix its model and might quickly lead to having the right model of the world or the right causal model of the world. And what we found in this paper was if you just do naive fine tuning that doesn’t happen. But if you do another kind of finetuning, which we propose, then you can get that. I want to make clear that’s not the only reason to look at this question because the way I just described it sounds like it’s just capabilities research and there’s the scientific question of does it happen with normal fine tuning? But the method itself right now just sounds like, “Oh, that’s something that’s going to make it easier for these models to become capable and understand the world rapidly.”

David: The reason that a method like that might be useful and good for alignment is that it could help with misgeneralization. This ability to understand what the right features are or the right way of viewing the world is probably also critical toward getting something that actually understands what we want it to do. It’s very murky, which I think is often the case with thinking about how your research is relevant for safety.

Michaël: It both helps the AI better understand the instructions but at the same time maybe become more agentic and dangerous. ⬆

Learning Causality From The Book Of Why

Michaël: About causality, I remember in one of your talks, you said that you tried to feed ‘The Book of Why’ to model to see if it would understand causality and, of course, not because you need actual actions or input-output data to understand causality or you need to interact with the world.

David: I said some stuff like that. I talked about The Book of Why. I was trying to present these two competing intuitions and the research that I’m interested in doing is to try and resolve which of these intuitions is correct or get a clear picture. I wouldn’t say… of course not… I would say there’s in-theory argument for why it shouldn’t work, but that in-theory argument doesn’t say how badly it will fail. And it doesn’t necessarily say much about what’s actually going to happen, but I think it is still a compelling argument, one that most people aren’t aware of. ⬆

Out Of Distribution Generalization Via Risk Extrapolation

David: This was for one of my paper Out-of-Distribution Generalization via Risk Extrapolation, bit of a mouthful or REX is the method. And that’s the last paper I did during my PhD.

Michaël: With Ethan Caballero, right?

David: He’s the second author. We have this remark in the paper that’s basically just saying, “Oh yeah, by the way, you can’t expect to learn the correct cause model just from having infinite data and infinitely diverse data.” When you talk to people who are like Ethan, let’s say the scale maximalists, this is a really good argument saying “actually no, this just isn’t going to work.” And I’m not saying the argument is actually true in practice. I’m just saying it’s worth taking seriously. It turns out that what actually matters is the actual distribution of the data and not just having full support and infinitely much data. And that’s because of hidden confounders. ⬆

You Cannot Infer The Right Distribution From The Full Support And Infinite Data

Michaël: What’s the full support thing?

David: Oh, full support. I just mean you see every possible input-output pair, every example… you see every possible example infinitely many times. You’d think that you’re going to learn the right thing in that case. It makes sense intuitively. But it’s just not true because there are some examples where you see the same input with the label as zero and the same input with the label as one. And so it actually depends on the ratio of those two types of examples that you see. And you will get different ratios depending on what kind of data you have.

Michaël: So you’re saying if you have infinitely many examples of the right distribution, you won’t be able to fit a function to approximate it?

David: No. If it’s the right distribution, then you’re good. But my point is the distribution matters. So it’s not just the coverage, but it’s the actual distribution. ⬆

You Might Not Be Able To Train On All Of Youtube Because Of Hidden Confounders

Michaël: You mentioned Ethan Caballero, and one thing you mentioned a lot when I was in Montreal was training on all of YouTube. So if your distribution is all of YouTube, can you get some causal model of the world?

David: I mean, who knows, right? That’s an open question. I think.

Michaël: From the paper risk extrapolation, is-

David: That doesn’t address the argument there. No, I mean there are always hidden confounders-

Michaël: What’s a hidden confounder?

David: A hidden confounder is something that affects the relationship between the variables that you observe and that you don’t observe. There’s always stuff that we don’t observe. You can only observe a tiny, tiny fraction of what’s happening at any given moment. And everything is connected. That’s just physics. So they’re just always hidden confounders.

Michaël: So you’re just saying there are infinitely many, many variables in the world… that it is impossible to build a true casual model of the world?

David: It’s not necessarily the case that you’re going to build the best causal model that you can by just trying to fit your observed data as well as possible. So you should be thinking about these issues of hidden confounders and you should be trying to model that stuff as well.

Towards Increasing Causality Understanding In The Community

Michaël: Do you think people in the Deep Learning community should build more causal models?

David: I think people should try and understand causality to some extent have a basic understanding of causality. By and large people in the research community do say, at this point, that it is potentially a major issue for applying machine learning in practice. Because I think there’s a lot of hype and over enthusiasm for things that won’t really work in practice. And that partially has to do with causality. I think it’s a good angle on robustness problems. You can do some pretty irresponsible and harmful things if you just build models and they look like they’re working and you haven’t thought about the potential causal issues there. ⬆

Michaël: The problem if it works too well then you have an agent that is very capable and capable of manipulating you. But if it doesn’t work very well, then it’s just like you actually have a bad agent, not very capable.

David: We’ve sort of been mixing together understanding causality and being an agent, but those are totally separate things in principles. You can have a good causal model of the world and still just be doing prediction. You don’t have to be an agent. Historically people who have been working on causality and machine learning are working on prediction more than agency. People in reinforcement learning have been thinking about this in the online context where the agent gets to learn from interacting with the environment. And there’s this recent trend towards offline RL where instead you learn from data that has already been collected and that obviously has the same problems of supervised learning where it’s just more clear how causality and causal confusion can be an issue.

David: But historically, people have been focused on the online case and then you just figure, well, okay, agent’s just going to be able to take interventions and test its actions are basically interventions. And so it can actually learn the causal impact of its actions that way. So there’s no real issue of causality there. And I guess I’m not sure to what extent that is correct either.

Causal Confusion In A POMDP

David: Because we have an example, another one of my papers from towards the end of my PhD, Hidden Incentives for Auto-Induced Distributional Shift. One of the results there was, we were doing online RL using Q learning in a POMDP. And if you set it up you can get causal confusion still.

Michaël: So what’s causal confusion?

David: Causal confusion. I just mean the model basically has the wrong causal model of the world or gets some stuff backwards, thinks X causes Y when Y causes X or Z causes both X and Y, these sorts of mistakes.

Michaël: What kind of POMDP? Was it the thing with the prisoner’s dilemma?

David: It’s a POMDP that’s based on the prisoner’s dilemma. You can think of cooperate or defect as invest or consume. And so basically if you invest now, then you do better on the next time step. But if you consume now, you get more reward now. The point of this was just to see if agents are myopic or not. If they consume everything immediately, then they’re myopic and if they invest in their future then they’re non-myopic. This seems potentially a good way to test for power seeking and instrumental goals because instrumental goals are things that you do now so that in the future you can get more reward. The delayed gratification is an important part of that.

Michaël: Are agents today are able to plan and invest or are they more myopic or it depends on different cases?

David: That was the simplest environment we could come up with to test this. It’s really easy if you build a system that is supposed to be non-myopic, then it will be non-myopic generally. But the question was if you build a system that’s supposed to be myopic. If you set the discount parameter gamma to zero so that it should only care about the present reward, does it still end up behaving as if it cares about the future reward?

One of these speculative concerns is that even if you aren’t trying to build an agent, there’s some convergent pressure towards things becoming agents. You might be thinking that you’re just building a prediction model, like a supervised learning model that doesn’t think about how its actions affect the world, doesn’t care about it, but you’ll be surprised one day, you’ll wake up and the next day it’s a superintelligent agent that’s taking over the world and you’ll be like, “Darn, I knew we shouldn’t have trusted GPT-5.”

Michaël: What are those kind of pressures? Just users interacting with it?

David: It’s super speculative to some extent. People talk about decision theories and stuff like this, and I think I’m going to kind of butcher this, but I guess I want to say there’s this view from people like Eliezer and MIRI and LessWrong, you can’t build something that is an AGI with human level intelligence, unless it is an agent.

And so if you wake up one day and found that you have built AGI, it means that however you built it, you produced an agent. So even if you were just doing prediction, somehow that thing turned into an agent. And then the question is how could that happen? Why could that happen? And it gets pretty weird. But I guess you can say if you really believe that agency is the best way to do stuff, is to be an agent, then maybe the best way to solve any task is to be an agent. Even if it looks like it’s just a prediction task.

Agents, Decision Theory

Defining Agency

Michaël: What is being an agent?

David: I feel like I’m really going to butcher this right now. It means a bunch of different things to different people in this context. It’s the term of art on the online existential-safety community, LessWrong, or Alignment Forum community would be consequentialist, which is a term borrowed from philosophy. But basically it means that, alluded to earlier, you’re thinking about how your actions will affect the world and planning for the future, but it’s even a little bit more than that because in the limit it means you have… I don’t know, are as rational as possible. And maybe that includes doing things like acausal trade and these weird decision theories that say you shouldn’t just think about the causal effects of your actions. You should act as if you’re deciding for every system, agent, algorithm, whatever in your reference class. ⬆

Acausal Trade And Cooperating With Your Past Self

Michaël: This is something I’ve never understood – acausal trade. If you have a good two minutes explanation, that would be awesome.

David: I don’t think I’m the right person, but again let’s just go for it. I can explain it with reference to my research and then we can say how it might generalize. In my case, it’s this prisoner’s dilemma environment. If you reason as if you are deciding for every copy of yourself, including the copy on the last time step. In this POMDP inspired by the prisoner’s dilemma in this work that we were talking about, if you think about yourself as deciding for all the agents in your reference class, that might include the version of you on the previous time step. And then you might say, “Well if I cooperate then it probably means I cooperated on the last time step, which means I’ll probably get to pay off of cooperate-cooperate and if I defect I probably just defect it. So I’ll get like defect-defect and cooperate-cooperate is better than defect-defect so maybe I should cooperate.”

David: Whereas normally the more obvious way of thinking about this so is to say, “Look, whatever I did is already done so I’m just going to defect because that helps me right now.” And these roughly map onto the first one, Evidential Decision Theory and the second one, Causal Decision Theory and the acausal trade falls out of these decision theories, like Functional Decision Theory which sometimes act more like EDT and sometimes act more like CDT. And you can now say “Okay, so that’s what I would do in this case. And then you can say, well what about agents in other parts of the multiverse who are like me? And maybe if I want to cooperate with those agents that exist in some other universe..” And that’s the part that I think I’m not going to do a good job of explaining. So let’s just say by analogy cooperating with your past self is cooperating with a copy of yourself in another universe or something.

Michaël: So why would you cooperate with your past self?

David: It’s not really cooperating with your past self here, it’s cooperating with your past and your future self in a way. Because your past self isn’t the one who gets the benefits of you cooperating, it’s your future self who gets the benefits of you cooperating or investing.

Michaël: So it’s kind of committing to a strategy of always investing or cooperating so that all the copies of yourself do the right thing.

David: But the way that you put it makes it sound like I’m going to commit to doing this from now on so that in the future I can benefit from it.

Michaël: Are the agents trying to maximize reward over time? You were talking about some gamma that was set to zero is the kind of gamma you have in RL where it can be 0.9 or one and the more close to one, the more to it’s able to think about the long-term. And so in the case of something cooperating with this past self or future yourself as gamma closer to one or non-zero?

David: All the experiments in this paper we had gamma zero.

Michaël: And even with gamma zero it tried to cooperate?

David: Well it did “cooperate,” right? There’s two actions and it took the one that corresponds to cooperating in the prisoner’s dilemma in some settings.

Michaël: Because it maximizes reward for one step or…

David: It’s kind of complicated to get into that because the question of what counts as maximizing reward kind of depends on your decision theory and stuff like this. At any point if you defect you’ll get more reward than if you cooperate from a causal point of view. But cooperate-cooperate is better than defect-defect and for some algorithms you end up seeing mostly defect-defect or cooperate-cooperate payoffs and cooperating ends up being really good evidence that you cooperated on the last time step. Good evidence that you’ll get the cooperate-cooperate payoffs and vice versa for defect. So it kind of depends on if you’re doing causal or evidential reasoning.

Michaël: I won’t go deep into all the decision theories because I haven’t read all of them. Do you remember why were we talking about agents? ⬆

Causality In Language Models

Causality Could Be Downstream From Next Token Prediction

David: We were talking earlier about this argument about the Book of Why and I said there’s this argument from my paper on risk extrapolation saying, “If you train your foundation model offline, it’s probably going to be causally confused.” And I think that’s a good argument, but I don’t think it’s conclusive and I think there’s good counterarguments. The one that I focus on is basically, “If your language model reads the Book of Why or another text on causality and is able to do a good job of predicting that text, then it seems like it must have some understanding of the content of the text. So it must understand causality in some sense.”

David: This goes back to this question we were talking about, “is the model going to build a good model of the world that is accurate?”. In this case this is the argument for why just training on text means that you need to learn a lot of things about how the world actually works because a lot of the text that you’re going to predict… the best way to predict that is to have a good model of the world. Then that’s an argument for saying actually it is going to understand causality. I think it’s pretty unclear how this all shakes out and it’s really interesting to look at it. We have another project that’s about that saying… ⬆

Models Might Be Leveraging Causal Understanding Only When Prompted The Right Way

David: There’s this question of… if it understands it, is it then going to actually use it to reason about things? It could have this understanding of causality or physics or what the actual world that we live in looks like and have that in a little box over here that it only ever uses that box when it’s in a context that is very specifically about that. So it only ever taps into the fact that the earth is a third planet from the sun when people are asking you questions about that thing, about the solar system and otherwise it’s not paying any attention to that part of its model or whatever.

David: But you might say, actually, it should be possible for the model to recognize which types of sources provide good, very general information that is about things like how the world works and to try and use that even outside of those contexts. That’s the question here is if you assume that the model is going to learn these things, is it actually going to reason with them across the board or is it only going to tap into them in very particular contexts? Is it going to reason constantly, even when it’s not looking at text about causality or is it only going to tap into that understanding when it’s predicting text from the Book of Why?

Michaël: The question is, will the model only understand causality when trying to fit some causal text?

David: Not will it only understand it, but will it only leverage that understanding or be using that part of its model.

Michaël: If we don’t prompt it with the Book of Why it will not be able to use the part of this model that is about causality.

David: Or, I would put it more, it will choose not to. But its ability versus motivation is something that we don’t really know how to disentangle rigorously in these models. Even if the models don’t do the causally appropriate stuff by themselves, it doesn’t mean that this is a fundamental barrier. In some sense it is. I think I tend to agree with a lot of the criticisms that people make of just scaling up Deep Learning and the limitations of it. And I’m like, “Yeah, those are limitations, but they don’t matter that much because we can get around them.” So if your model can do causely correct reasoning when prompted in the right way, then you just have to figure out how to get it to do that reasoning at the right time. I think that’s not that hard of a problem a lot of time, probably. ⬆

Recursive Self Improvement, Bitter Lesson And Alignment

Recursive Self Improvement By Cobbling Together Different Pieces

David: Similarly things about the model not having long-term memory or just all the other limitations that people have. Systematic generalization, it can’t robustly learn logical stuff and do deduction. We have existing frameworks and algorithms for doing deduction and doing reasoning and I think all the model needs to do is learn how to interact with those things or we can learn another system that puts all these pieces together.

David: That’s what I think is actually the most likely path to AGI, it’s not just scaling but somehow cobbling together all these different pieces in the right way. And that might be done using machine learning. That might not be something that people do. That might be something that in the end we just do a big search over all the different ways of combining all the ideas that we’ve come up with in AI and the search =stumbles across the right thing and then you might very suddenly have something that is actually has these broad capabilities.

Michaël: So we do some Neural Architecture Search but with different model that could interface with each other and at the end we get something that has one causal model, one language model and they all piece together to, I don’t know, use their superpowers or something together.

David: That’s roughly the right picture. Neural Architecture Search is a pretty specific technique, but it’s that thing. Meta-Learning, Neural Architecture Search, Automatic Hyperparameter Tuning are examples of things that are like this, but I think they’re all fairly narrow and weak compared to the things that machine learning researchers and engineers do. So if we can automate that even in a way that maybe isn’t as good as people but is reasonably good, at least is better than just random search and then we can do it faster than people can, then that could lead to something that looks like recursive self improvement but probably still not overnight. ⬆

Meta-learning In Foundational Models

Michaël: Is there any evidence for that? Because I feel like the trend in the past few years have been to just have Foundation Models that are larger, that can use different modality and not gluing together different models or searching over the space of how to interface two different models. Any paper or research in that direction?

David: One thing I would say is a lot of this stuff planning is being done with foundation models these days and it’s working quite well, but we’re explicitly inducing that planning behavior somehow. We’re building an architecture or we’re running the model in a way that looks like planning. We’re not just doing it purely end to end, we’re prompting it to make plans or we’re building architectures that involve planning at the architectural level, or we’re doing things like Monte Carlo research or some other test time algorithm that combines multiple predictions from the model in some clever way.

David: What I’m saying I think does go against current trends. From that point of view, there’s maybe some validity to critics who are like, “Oh, people are focusing too much on scaling”. From the point of view of just making AGI as fast as possible, which is not really what I think the goal should be, obviously. You do also see people using foundation models to do things like Meta-Learning, basically. Training a foundation model to take as input a dataset and output a predictor on that dataset.

Michaël: Wait, it just outputs a model?

David: I’m not sure if it outputs a model or if you take a data set and a new input that you want to make a prediction on and then you produce the output for that particular input.

Michaël: So it’s kind of simulating another model.

David: Basically trying to do all of the learning in a single forward pass, which is also an idea that Richard Turner, one of my colleagues at CBL has been doing for years, but now with foundation models but using neural processes as the line of work. So I think there is an ongoing effort to automate learning, research and engineering and it still hasn’t paid off in a big way yet, but I think it’s bound to at some point because everything’s going to pay off at some point because AGI… ⬆

The Bitter Lesson Does Not Address Alignment

Michaël: Are you on the side of the Bitter Lesson where any engineering trick or small formula will end up irrelevant with scale?

David: I don’t think that’s quite what the lesson says.

Michaël: It’s more about Meta-Learning than actually just scaling. This is more in RL, the methods that scale with compute or data or the meta-learning approaches are better than just engineered ones, right?

David: I don’t feel like the Bitter Lesson is being about meta-learning at all, but it’s just about learning. Learning, planning, search, two of those three, I think it’s learning and search that are the ones that he focuses on as being the things that scale. And I think this is true basically, and it’s one of the reasons in my mind to be worried about existential safety is because whether or not just scaling up Deep Learning leads to AGI, there are definitely large returns to just scaling up compute and data, but that doesn’t really deal with the alignment problem per se.

David: It might end up in some way. This is an argument that I’m trying to flesh out right now and I think it might just be kind of wrong or misguided in some sense, but intuitively it seems right to me. And the argument is as you get more and more complex systems, it becomes harder and harder to understand and control them just because they’re complicated and there are more different things that they could do. So you need to provide more bits to disambiguate between all the many things that they could do to get the things that you actually want them to do. And they’re just more complex. So it takes more labor to understand them if you want to really understand all the details.

Michaël: So the more complex the model is, the more work a human needs to do to actually determine if the model is aligned or not. If there’s one aligned model among two to the power of ten, you need more time or more feedback or more interpretability tools.

David: That’s the claim, which I think empirically it looks like this is just maybe wrong, it’s kind of unclear because people have found that as you scale up models, they may get easier to align in some sense. It seems like they understand better what we’re asking for and stuff like this. So I think that’s the part that I’m currently stuck on is how to deal with, because I think right now a lot of people won’t find this convincing, even though my intuition still says no, there’s something to this argument.

David: Another way of putting it I think, which also will certainly not land for everybody, but is in terms of this distinction between descriptive and normative information or facts. I think, the Bitter Lesson applies to descriptive things. Basically facts about how the world is, but it doesn’t apply to normative things, which is what is good or what we want. So learning and search and scaling don’t really address that part of how do we get all the information in the system about what we want. ⬆

We Need Strong Guarantees Before Running A System That Poses An Existential Threat

Michaël: So scale is not all you need when you need to do alignment?

David: Yeah. Another part of the argument is maybe it is, but we won’t really know. You can just hope that something works and just go for it. But I think what we should be aiming for is actually understanding and not taking that kind of gamble. There’s this distinction between a system being existentially safe and us knowing that that’s the case and having justified confidence in it. So not just thinking it’s the case or having convinced ourselves, but actually having a sound reason for believing that.

Michaël: So even if it would be existentially safe in 90% of the cases, we would need some kind of strong reason to believe that and be sure before running it. And even if there’s a high chance of it being safe, we wouldn’t have the tools to be sure it’s safe.

David: If you run this system, it’s either going to take over the world and kill everyone or not, and that’s pretty much deterministic, but you don’t know which of those outcomes is going to occur. You might say, “I have essentially no reason to believe that that’s going to happen except for some general concern and paranoia about systems that seem really smart being potentially dangerous.” From the point of view of existential safety, when we’re talking about potentially wiping out all of humanity, it seems like the bar should be really high in terms of the amount of confidence you would like us to have before we turn on a system that we have some reason to believe could destroy humanity.

Michaël: How high?

David: I don’t know.

Michaël: How many nines?

David: That’s a good question. I guess it depends on how longtermist you are, but I think we can say definitely a lot because the amount of harm that we’re talking about is at least killing everyone alive right now. And then if you value future people to some extent, then that starts to grow more. ⬆

Michaël: We’ve been bouncing around this notion of alignment or getting models to do what we want. Do you have any more precise definition? Because I’ve tried to talk to Mila people about it and it seemed like even if you were there for a few years, people didn’t know exactly what alignment was. ⬆

How Mila Changed In The Past Ten Years

David: I mean I was there for almost ten years and the lab grew a ton from something like fifty people, something like a thousand over the course of time when I was there.

Michaël: How was the difference between fifty to a thousand?

David: I mean, it is totally different. And then the last couple years there was pandemic, so I was already starting to lose touch I would say. Also when we moved to our new building, I think people weren’t coming into the lab as much and it changed the culture in various ways.

Michaël: How was the beginning when you were only fifty people?

David: I mean, it was cool. I don’t know. Can you give me a more specific question please?

Michaël: Would you cooperate or collaborate with mostly everyone and know what everyone was doing?

David: Not per se, but yeah, definitely more like that. I guess at the beginning I would say it was much more Yoshua’s group and everyone who to leading the vision for the group to a large extent. And everyone was doing Deep Learning and Deep Learning was this small niche fringe thing. So it was still… you could be up to date on all of the Deep Learning papers, which is just laughable now. ⬆

How David Decided To Study AI In 2012

Michaël: I think 10 years ago there was a story of Ian Goodfellow reading all the Deep Learning papers on Arxiv. This was 2012. So it was after AlexNet?

David: AlexNet and Ilya Sutskever’s Text Generating RNN were the things that got me into the field. I was watching these Geoff Hinton Coursera lectures and he showed those things and this was the first thing that I saw that looked like it had the potential to scale to AGI in some sense. And so I was already basically convinced when I saw those that, that this was going to be a big deal and I wanted to get involved. So then I applied in 2012 for grad school and started in 2013.

Michaël: But you needed to be at least in the field of AI to see those signals, right? Because most people came maybe in 2015 or 2016.

David: I mean wasn’t in the field of AI.

Michaël: So as someone outside, how did you saw it as very important?

David: I studied math and knew was trying to get a lot of different perspectives on basically intelligence and society and social organization and these sorts of things during my studies before that. And I was always interested in AI, but when I started college, I didn’t even know it was a field of research. I was definitely learning pretty slowly what it was and what was out there. I think I heard about machine learning when I was in my second or third year of college. And then I went and looked at maybe something, I don’t know if it was Andrew Yang’s Machine Learning course, but it was something that resembled that. There you see linear regression, logistic regression kernel methods, nearest neighbors. I was just like, “Well, this is crap. This is just not going to scale to AI maybe in over a hundred years if you just scale these things up with orders and orders of magnitude more compute. But it just seemed clearly not anything you should be calling AI.” And then I also heard about Deep Learning and Artificial Neural Networks in between my second and third year when I was doing research in computational neuroscience. Somebody drew a neural network and was like, “you train this with gradient descent based on the reward signal.” And I was like, “wow, that is so cool. This looks really, this is like the this is the right level of abstraction for people to try to understand and solve intelligence. Because the other stuff there was modeling individual neurons at the level of physics and stuff like this. And it’s just like this is never going to go anywhere anytime soon.”

Michaël: So just the abstraction was good and you had some basic understanding of linear regression. You saw the AlexNet paper and you were like, “Oh, this thing actually scales with more layers.”

David: When I saw that, I was really excited by it, but they said this doesn’t work and it’s sbeen disproven as an approach and I was sad. And so then I was like, “Oh there’s this course on neural networks on Coursera” and there were like then different courses that I had followed or whatever, but hadn’t really watched anything. And I just decided to binge watch that one on a whim. And I was like, “holy shit, they lied to me. It does work.”

David: So it was both, I had this intuition already that this was a promising approach to solving AI and then just seeing the text generation was artificial creativity of some sort. And then seeing that it could deal with what’s clearly a complex thing, which is vision and was just almost out of the box doing just a huge step improvement. You were seeing these other methods leveling off and then it was just like shoom! I think Geoff Hinton made a bunch of good arguments as well in his lectures about the scalability of the methods and about things like the curse of dimensionality. So from first principles, why you need to be doing Deep Learning or something like it somewhere in your AI system. ⬆

How David Got Into Existential Safety

Michaël: And to fast forward a little bit, you go to Mila, you spend 10 years there and nobody knows about alignment.

David: I mean it is a little bit puzzling to me, I guess. In the early days I was talking to everybody who I could to some extent about this. For the first couple years I was expecting that I would get to Mila and everyone would be like, “oh yeah, we know about that stuff and you don’t have to worry about it because X, Y, Z.” Have really good reasons. And turns out people didn’t know about it. So then, I started trying to talk to people about it a lot, but I was like an outsider, hadn’t really done programming before and stuff when I got there. And I really felt like a little bit intimidated and just impressed by the people there. And I didn’t really have much of a cohort when I started as well. There were two other guys starting at the same time as me, but they were doing industrial masters. So, I think it took me a little while to gain the confidence to just be really outspoken about this. But, by the end of my masters in beginning my PhD, I was like, “yeah, this is what I want to do and I’m going to try and talk to people about it and influence people.”

Michaël: When did you learn about alignment?

David: I’m not sure. Something I wanted to say earlier is, we’re talking about alignment, but I want to distinguish it from existential safety. I think alignment is a technical research area that may or may not be helpful for AI exponential safety. And it’s about, in my mind, getting the systems to have the right intention or understand what it is that you want them to do. Existential safety is the thing that I think I mostly am focused on and like to talk about in this context. I think they get interchanged a lot.

David: I learned about it before I started grad school, but I’m not sure how long before. My perspective before realizing that AGI might happen in my lifetime was basically… Maybe this will be a concern when AGI actually happens in the distant future. But, in the meanwhile everything is going to get really weird and probably really fucked up because we’re just going to have better and better automated systems of monitoring and controlling people basically. And those are going to be deployed probably in the interest of power or in, maybe something more a best case, they’re going to just fall victim to bad social dynamics. It’s still just not really optimizing for what we care about. ⬆

Harm From Technology Development Might Get Worse With AI

Michaël: Do you still agree with that view, a slow takeoff scenario?

David: I don’t know about this slow takeoff or hard takeoff thing. They’re both quite plausible. But even if we do not have an AGI, we would still have a lot of major issues from advancing narrow AI systems. That still seems right to me. I mean I think it’s hard to know.

Michaël: In our trajectory, where we’re at right now, do you think we will see harms from narrow systems for multiple years before we get to AGI or will we only get to those very negative harms when we’re very close to AGI? That’s the question. Is it a period of three or five years of society going crazy and AI systems being unaligned causing harm or is it just before crunch time?

David: I think things are already going crazy and have been for a while. So, I think the way that the world works and is set up, it doesn’t make sense. It’s nuts. And you look at just the way that money works and there’s just all of this money being created via financial instruments that is pretty detached from the fundamentals of the economy. And I think you look at the way that our collective epistemics work and a lot of people are just very badly misinformed about a lot of things and there are a lot of structural incentives to provide people some combination of the information that grabs their attention the most and also is helpful to people with a lot of power. That’s kind of how the information economy works for the most part.

Michaël: So you’re saying basically, it’s not very balanced in terms of how the money is created, how it’s spread over economy and information is also fed to people’s brains in a way that hacks their reward system and controls them or manipulates them and maybe AI will exacerbate both power imbalance or financial imbalance?

David: It will and it already has been, is the other thing. It’s not just AI, it’s the development of technology that is useful for understanding humans and predicting them, in general. So marketing, as a thing, is kind of a recent invention and it’s based to some extent, I think, on us having a better understanding of how to manipulate people and then having the technology to do that at scale for cheap. ⬆

Coordination

How We Could Lose Control And Lack Coordination

Michaël: Is your research focused on solving those short-term, current problems or more existential safety? So to be clear, existential safety is when we run a system, when we press play or something, the system takes over the world.

David: No, existential safety is much broader than that. So, I totally reject this dichotomy between the existential safety of the fast takeoff, the thing takes over the world immediately, and this slow takeoff of things get less and less aligned with human values and we’re more and more manipulated and optimizing at a societal level for the wrong things. Just increasing GDP even though we’re destroying the planet. Just kind of dumb.

Michaël: Because one causes the other. One can make the other much more likely. If humanity is going in the wrong direction then…

David: That’s part of it, is that that can lead to a situation where, ultimately, we lose control in the sense that no set of human beings, even if they were in some sense perfectly coordinated. It could change the course of the future. So that’s one sense of loss of control, that’s in the strongest sense, where even if everyone all got together and was like, “let’s just stop using technology, destroy the computers, unplug the internet, everything,” it would be too late. And the AI has its own factories or ability to persist in the physical world, even given our best attempts to destroy it.

David: That’s the strongest version. But there’s also a version of out of control AI, which is just out of control, I don’t know, climate change. Where it’s like, in some sense we could all coordinate and stop this but we’re not doing it. We probably aren’t going to do it to the extent that we should. And there’s no one person or small group of people who have the power to do this. It would take a huge amount of coordination that we don’t really have the tools. Okay, I probably have to walk this back because I don’t know enough about it. And to some extent it probably is something where it’s just, I’m not sure we need fundamentally new coordination tools to solve climate change. We might just need a lot more moral resolve on the part of people with power. But I think there are definitely a lot of gnarly coordination problems that are contributing in a massive way to climate change. ⬆

Low-Hanging Fruits In Coordination

Michaël: Is there any way to solve those coordination problems? Isn’t it basically intractable compared to other technical problems in AI?

David: No, I don’t think so. We know that there are voting systems that seem a lot better than the ones that we use in the United States anyways and I think are still really popular. People call it first-past-the-post, which is a terrible name because it’s actually whoever gets the plurality wins. So, the post isn’t even at some fixed point, but just the majority rule. Actually, plurality rule. Majority rule is also a bad name. Things like approval voting seem to just be basically robustly better for most contexts. And of course there are these impossibility results that say, no voting system is perfect, but you talk to people who actually study this stuff and it seems like pretty much everyone likes approval voting and would just sign off on using it everywhere that we’re using, whatever you should call this thing, majority rule, first pass post, plurality rule, let’s call it the X.

David: That’s what it is. So that’s just one simple example. I think there’s a lot of low-hanging fruit for doing more coordination, both at just the level of people talking to each other more and trying to understand each other and be more cooperative. So, let’s say internationally it seems like there’s surprisingly little in the way of connections between China and the West. I think you have a massive imbalance where a lot of people in China come to the West to study and to have a career and a lot of people there learn to speak English and you don’t really see the opposite. So there’s very little, I would say understanding, or even ability to understand what’s going on in China and what the people are like there, what the system is like there from people in the West.

Michaël: So Americans are not trying to understand China and it is kind of a one-sided relationship?

David: To a large extent. And why is that happening? I guess it’s for historic and power reasons where English is this lingua franca, that it just makes sense for people to learn. Whereas I think it would make sense from a lot of point of view, including individuals and maybe the US national interest for more people to learn Chinese. But it’s difficult and there’s not this obvious pressing need because stuff gets translated into English. That’s just one example. Then, I think we have coordination mechanisms that could be scaled up. Basically assurance contracts, which are things like Kickstarter; where you say, if I will agree to do this, let’s say I will agree to boycott this company for their unethical practices, assuming enough other people agree to do it. And until we have enough people we just won’t do the boycott.

David: That’s an assurance contract. So if you can monitor and enforce those contracts, then you can solve a lot of collective action problems because there’s essentially no cost to signing up for one of these, especially if you can do it in a non-public way. So you can’t be punished in advance for taking that political stance. And then once you have enough people who are on board with taking that action, then you actually take it. That’s why you need the monitoring enforcement part to make sure that people actually do follow through on their commitments. But, I think there’s tons of low-hanging fruit in that area.

Michaël: So some kind of centralized system where people can just pre-commit to some action if millions of people are boycotting some company. ⬆

Coordination Does Not Solve Everything, We Might Not Want Hiveminds

David: It doesn’t have to be centralized, could be decentralized. This is something that I’ve been thinking about for a long time and hesitant to talk about. Because I think it also could pose existential risks. Because I think at the end of the day it’s not just a problem of coordination, it’s also coordinating in the right way or for the right things for something. So if you think about an hive, it is very well coordinated, but we probably don’t want to live in a future where we’re all basically part of some hive mind. I mean, I don’t know. Hard to say definitive things about that, but I’m sure a lot of people right now would look on that future with a lot of suspicion or disgust or displeasure. We should try and avoid a situation where we just end up in that kind of future without realizing it or unintentionally or something. I think better coordination without thoughtfulness about how it’s working could potentially lead to that.

Michaël: Are you basically saying that we need to do enough coordination to not build unsafe AGI, but not too much because otherwise we’ll end up being just one mind and then some people might prefer to be individuals and not one giga brain?

David: I think the second possible failure mode is really speculative and weird and I don’t know how to think about it. I just think it’s something that we should at least be aware of. But I think we definitely need to do enough coordination to not build AI that could take over the world and kill everybody if that looks like it’s a real possibility. Which in my opinion, yes, that’s something we should figure out and worry about. ⬆

Advanced AI Systems Should Be Able To Solve Coordination Problems Amongst Themselves

Michaël: I think some people in the open source community just have this different scenario from people in the safety community. Whereas people in the safety community will think of one agent being vastly smarter than other agents and this agent might transform the earth into some giant computer. Whereas people in the open source community might think that we might get different agents, multiple scenarios where if you open source everything then maybe we get different levels and different balance each other out. Do you believe we are going to get a bunch of different AIs balancing each other or one agent smarter than the others?

David: I don’t have a super strong intuition about this. I think the agents balancing each other out thing is not necessarily better from an existential safety point of view. There are also these arguments that I find fairly compelling, although not decisive saying if you have highly advanced AI systems, they should be able to solve coordination problems amongst themselves. So even if you start out with multiple agents, they might form a hivemind basically because they can solve these coordination problems that humans haven’t been able to solve as effectively. So one reason why it might be easier for AI systems to coordinate is because they might be able to just look at each other’s source code and you know, you have to ensure that you’re actually looking at the real code and they aren’t showing you fake code and stuff like this. But it seems plausible that we could end up in a situation where they can all see each other’s source code and then you can basically say, is this somebody who is going to cooperate with me if I cooperate with them?

Michaël: So one scenario I have in my mind is just if we deploy a bunch of those Action Transformers from Adept that can do requests on the web, and I know they kind of understand that the other person they’re interacting with is an AI as well. And so you can have those millions of agents running into this at the same time. Is this basically what you’re saying? You get those things that identify each other through requests and communicate to each other.

David: I’m not sure I’ve understood, but it sounds like a more specific version of what I’m saying basically. ⬆

David’s Approach To Alignment Research

The Difference Between Makers And Breakes, Or Why David Does Not Have An Agenda

Michaël: I think a lot of the people that asked me questions on Twitter were kind of more interested in your research agenda for solving existential safety and not other takes you might have. One thing that’s kind of very specific about yourself is that you don’t have a specific agenda, but you have a bunch of PhD students doing a bunch of different things. And that’s one thing. The other thing is you often say that you’re more of a breaker than a maker. You prefer to break systems than build systems. Maybe I’m misrepresenting your view, can you elaborate on this?

David: Totally. I’ve been thinking about this stuff for a long time, more than 10 years now, and it’s always seemed like a really hard problem and I don’t see any super promising paths towards solving it. I think there are these Hail Mary things where it’s like, “oh yeah, maybe this will work,” and it makes sense to try, probably. But for all of those, none of them I think are anywhere close to addressing this justified confidence issue that we talked about. The ones that look like they might have a chance of working in the near term are mostly just, “let’s just cross our fingers and just hope that the thing is aligned. We’ll train it to do what we want and hopefully it does.” So I’m not very optimistic about those approaches.

David: I think, like I said, maybe it works, but I’m more interested in providing strong evidence or reasons and arguments for why these approaches aren’t going to work. So my intuition is like, it’s probably not going to work. Probably things are going to go wrong for some reason or another. Seems like there are a lot of things that’ll have to go right in order for this to work out. So that’s the maker breaker thing that you’re talking about. And this is a recent way that I’ve thought about framing my research and what I’m doing. So I’m in the process of maybe trying to develop more of an agenda. I’ve historically been a little bit, I don’t know, anti-agenda or skeptical of agendas or the way that people talk about it right now in the rationalist community or something like this. ⬆

Deference In The Existential Safety Community

David: The online non-academic existential safety community just seems kind of weird. Playing into some dysfunctional dynamics of people who don’t really understand stuff well enough. So they defer a bunch to other people. And the people who they defer to are, it seems like a lot of it is social and trendy and very caught up in whatever’s going on in the bay. And of course then there’s also influence from tons of billionaire money. I mean maybe not anymore in the same way, so I think the epistemic of the community get distorted by all these things. There are some things where it ends up looking a little bit like these social science fields where it’s more about cult personality and who is hot and who knows who and who you’re trying to interpret, “oh, did Paul mean this by this or did he mean that?”

David: And you have these debates that aren’t really about the object level question. I can go on and on about the gripes that I have with these this community. And to some extent I’m kind of picking on them because I guess it’s just some recent interactions I’ve had have updated me towards feeling like there’s more dysfunction than I would have thought. And I’m not that well plugged in, so I can’t say how much I’m being unfair or generalizing too much or something. But this is just my impression from some recent interactions.

Michaël: Recent events or just personal interactions?

David: I think it’s personal interactions, it’s events, it’s reading things online, a lot of it is second or third hand, it’s not even me personally having bad interaction with somebody, it’s other people complaining to me about their interactions. So that’s why I have to caveat it and say maybe some of this is pretty off base and I don’t want to tar the whole community. But what were we talking about? Agendas?

Michaël: Yeah. ⬆

How David Approaches Research In His Lab

David: I think the way that people talk about agendas and everyone asking me about agendas feels like a little bit weird. Because I think people often have some agenda. But I think agenda is a very grandiose term to me. It’s oftentimes, I think people who are at my level of seniority or even more senior in machine learning would say, “oh, I’m pursuing a few research directions.”

David: And they wouldn’t say, “I have this big agenda.” And so I think my philosophy or mentality, I should say, when I set up this group and started hiring people was like, let’s get talented people. Let’s get people who understand and care about the problem. Let’s get people who understand machine learning. Let’s put them all together and just see what happens and try and find people who I want to work with, who I think are going to be nice people to have in the group who have good personalities, pro-social, who seem to really understand and care and all that stuff.

David: It’s not this top down I have the solution for alignment and I just need an army of people to implement it. I’ve described it as something of a laissez-faire approach to running the lab. To some extent I have that luxury because of getting funding from the existential safety funders. So they’re not placing a lot of demands on me in terms of the outputs of the research, which is, I think, pretty unusual. I’m pretty lucky to have that compared to a lot of academics. I think a lot of times in academia you have a lot more pressure to produce specific things because that’s what the funding is asking for.

Michaël: So you have both the, let’s say, flexibility of choosing what research you want to do, but at the same time you’re trying to go for the rigor of the original academic discipline? ⬆

Testing Intuitions By Formalizing Them As Research Problems To Be Investigated

David: One thing, if you look at the people arguing about this stuff in the bay and online and in person and this non-academic existential safety community, which includes some people who are in academia as well, but you know who I’m talking about. Anyways, if you look at what’s happening there, people just have very different intuitions and they argue about them occasionally and then they go away with their different intuitions and then they go off and take actions based on their intuitions. And it kind of struck me at some point that “hey, maybe we can actually do research on these problems and try and understand.” So we talked earlier about this example of the AI in a box and does it learn to reverse engineer the outside world. So that’s something where people have really different intuitions and we can just approach that as a research problem.